Introduction

Sentence splitting is the process of separating free-flowing text into sentences. It is one of the first steps in any natural language processing (NLP) application, including the AI-driven Scribendi Accelerator. A sentence splitter is also known as a sentence tokenizer, a sentence boundary detector, or a sentence boundary disambiguator.

At first glance, the process seems fairly simple: insert a sentence break after every period, question mark, or exclamation point. However, abbreviations that end in a period are, like any other word, more often sentence-internal than sentence-final. Abbreviations are also an open-ended set, so a sentence splitter must be able to recognize domain-specific abbreviations when they occur with a final period. Moreover, a question mark or exclamation point can end a fragment embedded within a sentence instead of terminating the sentence.

The Accelerator performs grammatical error correction (GEC) of English texts, also identifying errors in spelling, syntax, and punctuation. It analyzes complete sentences, so the quality of its output depends on having correct sentence boundaries. If a sentence boundary is not detected, the Accelerator is presented with two sentences joined as one, which negatively affects checks such as subject-object coordination. Conversely, if a false sentence boundary is detected (e.g., after an abbreviation), the Accelerator is forced to work with two truncated sentence fragments.

The Accelerator currently uses an off-the-shelf sentence splitter, NLTK Punkt, and we wanted to determine whether another technology, or combination of technologies, could do a better job. We ran Punkt with a list of 55 common abbreviations, and it detected sentence boundaries with a 96.59% accuracy rate (a 3.41% error rate) on a corpus of Scribendi-edited texts. The errors included both Type 1 and Type 2 errors: spuriously detected false sentence breaks and undetected correct sentence breaks, respectively.

The Type 1 errors were mostly false sentence breaks after abbreviations and personal initials, but there were also several after the periods in ellipses. Several abbreviations appeared multiple times in the errors, indicating that the system did not recognize them as abbreviations and treated them as sentence-ending words.

Many Type 2 errors occurred when the system failed to split sentences ending with quotation marks after the final period. There were also several cases of sentence-ending abbreviations and personal initials that were falsely considered sentence-internal. In contrast to the Type 1 errors, these appeared to be known abbreviations that the system could not detect as sentence-ending in the exceptional cases where they were.

The goal of this project was to improve on these sentence boundary disambiguation (SBD) results, achieving an error rate of less than 1% on Scribendi-edited data. We chose to use our own data because it has fairly wide domain coverage and because it is the domain in which we use sentence splitters. There were enough systematic errors to suggest that we could do much better by fixing punctuation problems, increasing the coverage of the training data, improving abbreviation coverage, and improving or adding heuristics. The plan was to evaluate several sentence splitters, apply fixes as necessary, and compare the results. We also considered combining the results of multiple sentence splitters to exploit the relative strengths of each.

Related Work

There have been a few survey studies on sentence splitter systems. Most notably, Read et al. (2012) evaluated nine sentence splitters on a total of seven corpora. They view SBD as the general problem of finding all “boundary points” in text, which includes separations after headers, paragraphs, titles, list items, and pre-formatted text. This is in addition to the conventional SBD definition of separating sentences at sentence-terminating punctuation.

Finding the breaks after headers, etc. is the process of document boundary detection, while the conventional definition of SBD (splitting punctuated sentences) is the detection of sentence boundaries. In rich texts like Word documents, the document structure elements are normally indicated by markup, and separating them at their boundaries is trivial. But separating these elements in unmarked plain text is a problem. We used the conventional SBD definition and considered document boundary detection a separate problem that does not exist in properly marked up text.

Table 2 in the article by Read et al. shows the results of applying sentence splitters to four formal corpora that contain little or no markup. Some of the results were negatively affected because of a failure to find the document boundaries. Recognizing this, the authors experimented with splitting the corpora at paragraph boundaries. Thus, the splitters were not presented with paragraph boundaries and could not generate errors there. Some sentence splitters do not recognize Unicode quotation marks, so they experimentally replaced these characters with ASCII punctuation characters to see if this improved the results. The best results from these experiments are shown in Table 3, and the improved scores are more compatible with the conventional definition of the SBD process. However, Read et al. did not mention compensating for other document boundary elements like headings and list items, so there could still be document boundary errors remaining.

The averaged F1 score for Punkt in Table 3 is 97.3%. In the section "Adaptations for Punkt" later in this blog post, we describe how we compensated for the problem of Unicode punctuation handling by fixing the tokenizer. Our processing also skips over document elements like headers. The resulting error score is 4.67%, evaluated on the Scribendi sentence corpus (Table 2 of this blog post). The corresponding F1 score is 97.3%, the same as in Table 3. This is, of course, a coincidence because both the corpora and the handling of the document structure were different, but both represent the results of applying Punkt to corpora that it was not trained on, without the addition of a list of abbreviations.

Most of the results in Table 3 cluster around the Punkt results: the top six results are in the narrow range of 97.2% to 97.6%, which, by extrapolation, corresponds to an error score of 4% or greater. This is in contrast with section 2 of the article by Read et al., which summarizes the results of seven SBD research papers, reporting error scores between 0.2% and 2.1%, with the majority under 1%.

The research papers were all evaluated using the well-known Brown and WSJ corpora. The high scores can be explained in part by the fact that the systems were trained on the same corpora and that all the abbreviations were known. Using correct corpus training/testing splits cannot compensate for the fact that these systems were trained and tested on the same relatively narrow domains.

Read et al.’s article continues by evaluating three more informal corpora from Wikipedia and Web blogs. The results are posted in Table 4. The B columns show the improved results when eliminating document structure errors, but unfortunately, the treatment was different from that used in Table 3. Here, the authors put paragraph boundaries around things like headings and list items, which was not done for the results presented in Table 3. The results for the Wikipedia corpus are better than those of the combined scores of the older corpora presented in Table 3, but as Read et al. admit, the difference is probably due to the different handling of document structure elements.

The B results in Table 4 decrease as the informality of the corpora increases. This could be explained by the fact that the modern, informal text deviates more from the older and more formal corpora that the sentence splitters were trained on.

The conclusion that can be drawn from the paper by Read et al. is that strong SBD results can only be expected for sentence splitters trained on the same domain and that also have knowledge of the domain’s abbreviations. In their conclusions, Read et al. show an interest in experimenting with domain-adapted lists of abbreviations.

More recently, Grammarly published a blog post in 2014 comparing the results of seven sentence splitters. The best result, evaluated on a single corpus, had a 1.6% error rate. They lowered this from 2.6% by retraining on 70% of the corpus and testing on the remaining 30%, and by applying some heuristics in post-processing. There was no mention of abbreviation lists. See the section titled Comparison with the Grammarly Blog Post "How to Split Sentences" for a comparison with the final results of this project.

The Scribendi Sentence Corpus

In theory, sentence splitter performance can be evaluated on any text source that has indicated sentence boundaries. An example is the English sides of parallel texts used for the training and testing of statistical machine translation systems. A well-known example is the Europarl corpus of European parliament proceedings consisting of parallel texts in 11 languages, described in Koehn (2005).

Corpora for statistical machine translation need to be very large in order to build a good representation of the relation between the source and destination languages. In the Europarl corpus, the size is one million sentences per language. The source and destination language texts need to be aligned by paragraph and then by sentence. Section 2 of Koehn (2005) describes the process, which uses sentence splitters to find the sentence boundaries.

Using the English side of an aligned pair requires a manual review of the automatically determined sentence boundary positions, because they must be much more accurate than the 1% error rate we are expecting to obtain from the sentence splitters we are evaluating. Because of this exacting requirement, we were not able to find any open-source corpora, aside from the Brown and WSJ corpora, that could be used without a manual review and repair process. Instead, to evaluate the candidate sentence splitters, we created a text corpus from a small sample of Scribendi-edited texts and then created an evaluation program to determine the error rate of each technology.

We built the corpus from random selections of a random sample of documents that were submitted and edited during 2018. By sampling over a whole year, we expected to get a good statistical sample of various document types. Taking selections from 3% of the edited documents gave us a corpus file of around 50,000 sentences.

The corpus was built using plain text extracted from word processing documents. The results were processed line by line and separated by line feeds. A normal paragraph came out as a long line consisting of several sentences, each ending with sentence-terminating punctuation. However, the resulting lines were sometimes shorter when they consisted of single-sentence paragraphs, titles, or headers.

To create a sentence corpus from this raw data, it was necessary to indicate the sentence boundaries. We developed a tool to find the likely sentence boundaries and mark the ambiguous cases for manual verification.

The most straightforward way of constructing the sentence corpus would have been to heuristically break each paragraph into sentences and save them as one sentence per line. However, this structure would have been unwieldy during the subsequent manual review process, which involves adding or removing sentence boundaries that were incorrectly identified. The problem is due to the line-oriented nature of text-difference utilities.

As a simplified example, Figure 1 shows what would happen in a side-by-side difference utility if a missing sentence break was added between two manual review steps. Imagine that “Sentence 1”, etc. represent full-length sentences, and that a manual review step inserted a missing sentence break between the first and second sentences. Difference utilities operate line by line, so “Sentence 3” on the left would be compared with “Sentence 2” on the right, while “Sentence 2” on the left would hang off the end of “Sentence 1” and not be compared with anything. It would only take a few differences between two steps of the corpus editing process to make it very difficult to visually compare the individual sentences.

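Figure 1: Side-by-side text difference after a missing sentence break is inserted between Sentence 1 and Sentence 2 (before on the left, after on the right).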

To avoid these problems, we chose to maintain the original paragraph lines and instead insert specialized markup at the end of each line to indicate the character offsets of the sentence ends. This and other markup were added to each line when required and could be edited with a text editor during the manual review process, maintaining the integrity of paragraphs in the source text while allowing the different steps of corpus preparation to be easily compared as text differences.

This format is also the only way to represent the edge case of sentence breaks with no space after a sentence's final punctuation (attached sentences). Training a sentence splitter to detect these cases in submitted documents could prove useful, because its output could then be used to correct them.

Markup was inserted at the beginning or the end of a paragraph line and consisted of one or more colon-separated fields with a colon at the start and the end. Titles and headers used markup at the start of a line, while sentence boundary positions used markup at the end of a line. Some examples are shown in Figures 2 to 7 below.

Unlike long paragraphs and single sentences, titles and headers are usually short and lack sentence-ending punctuation. Logically, we can consider a sentence corpus as continuous blocks of paragraphs interspersed with titles and headers. The paragraphs provide individual sentences, but we need to ignore intervening titles and headers that are generally not punctuated sentences.

We made this distinction by marking titles and headers using a simple heuristic: any line less than 50 characters long and without a sentence terminator was marked as a header to be excluded from the list of paragraphs. This is a good approximation because paragraphs are usually much longer and end with sentence-ending punctuation. Once the potential titles and headers were marked, we reviewed them manually and changed the markup in the rare cases where the marked text was actually a short paragraph (usually a single sentence). Scanning the marked headers sequentially also highlighted the document structure, revealing longer headers that were not detected by the heuristic. Upon discovery during the manual review, these were also marked as headers.
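The header heuristic is simple enough to sketch in a few lines. This is a minimal illustration rather than the production tool: the names `looks_like_header`, `premark_headers`, and `MAX_HEADER_LEN` are hypothetical, and treating a terminator followed by closing quotes or brackets as "terminated" is an assumption.

```python
import re

# A line counts as "terminated" if it ends in ., ? or !, optionally followed by
# closing quotes or brackets (an assumption about what counts as a terminator).
TERMINATED = re.compile(r"[.?!][\"'\u201d\u2019)\]]*$")
MAX_HEADER_LEN = 50  # length threshold from the heuristic described above


def looks_like_header(line: str) -> bool:
    """True if the line is short and lacks a sentence terminator."""
    stripped = line.strip()
    if not stripped:
        return False
    return len(stripped) < MAX_HEADER_LEN and TERMINATED.search(stripped) is None


def premark_headers(lines):
    """Prefix candidate titles/headers with :h1: for later manual review."""
    return [":h1: " + line if looks_like_header(line) else line for line in lines]
```

For example, `premark_headers(["Introduction", "Hi."])` marks only the first line. A 72-character title with no final punctuation slips through because of its length, which is why the manual review pass is still needed.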

Figure 2 shows a few section headers that were correctly detected by the heuristic. The markup :h1: indicates a header.

:h1: Introduction

The last three lines in Figure 3 were marked as headers because of their length and the lack of sentence punctuation; however, they are actually short sentences. The manual review step removed the :h1: markup and added periods to terminate the sentences.

... I instead suggest something that follows this framework:

Figure 4 shows the first title line of an article as an example of a long line that was assumed to be a paragraph. The manual review changed it to a header by adding :h1: markup to the start of the line. We did not distinguish between titles and headers in the markup: both are treated as non-sentences.

The Effects of Family Life Stress and Family Values on Marital Stability

There were also some unusual line-termination cases requiring special handling. Normally, an extracted paragraph line ends in sentence-ending punctuation. However, it may end in sentence-internal punctuation, such as a comma, semicolon, or colon. In such cases, we assume that the sentence continues on the next line, as shown by the first line of Figure 3.

A particularly difficult case is when an extracted non-header line has no final punctuation. This could be the end of a sentence that is missing its final punctuation or a sentence that was incorrectly split over two lines. Such issues require manual review to resolve. The process marks these cases in the corpus first and a manual reviewer resolves each one by either adding sentence-ending punctuation or inserting an indication that the sentence continues on the next line.

With the distinction made between paragraphs, titles, and headers, the remaining crucial step was to mark the sentence boundaries inside paragraphs. Any period at the end of a token that is not a number or an abbreviation is most likely a sentence break. The same applies to question marks and exclamation marks at the end of a token. The automatic process marks all of the above as sentence breaks, which covers most occurrences of these characters.

Figure 5 shows terminations marked as highly likely sentence breaks by the above logic. A sentence end position is marked by :EOL: followed by the column number.

... my view of the world. Through it, I have been exposed ... :EOL:160:
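The automatic marking step can be sketched as follows, assuming whitespace-delimited tokens and a 0-based offset that points at the sentence's last character (our reading of the :EOL: column number). The abbreviation set, the helper names, the repeated `EOL:n:` field layout for several breaks, and the choice to leave the paragraph-final break implicit are all assumptions for illustration.

```python
import re

# Hypothetical abbreviation list: lowercase, including the final period.
ABBREVIATIONS = {"dr.", "e.g.", "i.e.", "inc.", "u.s."}
OPENERS = "\"'\u201c\u2018(["   # stripped from the start of a token
CLOSERS = "\"'\u201d\u2019)]"   # stripped from the end of a token
NUMBER = re.compile(r"[\d.,]*\d")  # e.g. 3, 2019, 1,000, 3.5


def likely_sentence_ends(paragraph, abbreviations=ABBREVIATIONS):
    """0-based offsets of the last character of each token that ends in ?, !
    or a period that is not part of a number or a known abbreviation."""
    ends = []
    for match in re.finditer(r"\S+", paragraph):
        core = match.group().lstrip(OPENERS).rstrip(CLOSERS)
        if not core or core[-1] not in ".?!":
            continue
        if core[-1] == "." and (
            core.lower() in abbreviations or NUMBER.fullmatch(core[:-1])
        ):
            continue
        # The sentence ends after any closing quote or bracket, e.g. book.\u201d
        ends.append(match.end() - 1)
    return ends


def add_eol_markup(paragraph, abbreviations=ABBREVIATIONS):
    """Append :EOL:n: fields for internal breaks only (assumption: the
    paragraph-final break is implicit and is not written out)."""
    last = len(paragraph.rstrip()) - 1
    internal = [e for e in likely_sentence_ends(paragraph, abbreviations) if e != last]
    if not internal:
        return paragraph
    return paragraph + " :" + "".join(f"EOL:{e}:" for e in internal)
```

For "See the world. Then go home! Ask Dr. Smith.", the sketch marks offsets 13 and 27 and skips "Dr." because it is in the abbreviation list.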

Abbreviations, however, are a problem. They are usually sentence-internal (with periods that are not sentence breaks) but can also be sentence-final words. To identify these cases, all abbreviations in a text must first be located.

We started with the general abbreviation list described in Grefenstette and Tapanainen (1994), section 4.2.4, and then let the abbreviation discovery procedure of the NLTK Punkt training look for more. Punkt uses unsupervised training, so it can use extracted paragraphs directly. The abbreviation discovery process is far from perfect and can both over- and under-generate less frequent abbreviation candidates. As such, a manual review was required to get a solid list.

The process then marked all abbreviation positions in the corpus, allowing manual reviewers to view these positions. All abbreviations followed by capitalized words or numbers were then marked for human review to examine each one in context and decide if a sentence break was present. These ambiguous sentence endings required manual resolution. The remaining abbreviations (followed by lowercase words or punctuation) were not marked as sentence-ending because their context indicated that they were highly likely to be sentence-internal.

With this scheme, only a small fraction of the potential sentence breaks required manual verification (i.e., periods at the end of abbreviations followed by potential sentence-starting words). Figure 6 shows an abbreviation followed by a lowercase word, making it sentence-internal (not a sentence terminator). An abbreviation is marked with :ABBR: followed by the position of the start of the abbreviation and the position of the final period separated by a hyphen. Sentence-end markup was not added.

... employed at SnowValley Internet Inc. as a part-time software engineer ... :ABBR:134-137:

Figure 7 shows two ambiguous sentence endings with capitalized words after the abbreviation. The first is a sentence break, while the second is not (i.e., it is sentence-internal) as determined by manual review. The ambiguity is communicated to the reviewer with the :E: markup. The reviewer changes the :E: to :EOL: if it is in fact a sentence break. After manual review, any remaining :E: markup was simply ignored.

... labor productivity with the U.S. It is also noticeable ... :ABBR:825-828:E:828:
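The abbreviation pass can be sketched as a function that emits fields in the same shape as Figures 6 and 7. This is a minimal sketch: the abbreviation set and function names are hypothetical, and the assumption is that several abbreviations on one line simply produce several consecutive fields.

```python
import re

# Hypothetical abbreviation list: lowercase, including the final period.
ABBREVIATIONS = {"dr.", "e.g.", "inc.", "u.s."}
OPENERS = "\"'\u201c\u2018(["
CLOSERS = "\"'\u201d\u2019)]"


def abbreviation_fields(paragraph, abbreviations=ABBREVIATIONS):
    """Return markup fields for every known abbreviation in the paragraph.

    Each abbreviation yields ABBR:start-end: (offsets of its first character
    and of its final period). If the next token starts with a capital letter
    or a digit, an E:end: field flags a possible sentence break for review.
    """
    tokens = list(re.finditer(r"\S+", paragraph))
    fields = []
    for i, match in enumerate(tokens):
        raw = match.group()
        lead = len(raw) - len(raw.lstrip(OPENERS))
        core = raw[lead:].rstrip(CLOSERS)
        if core.lower() not in abbreviations:
            continue
        start = match.start() + lead
        end = start + len(core) - 1          # offset of the final period
        fields.append(f"ABBR:{start}-{end}:")
        if i + 1 < len(tokens):
            first = tokens[i + 1].group().lstrip(OPENERS)[:1]
            if first.isupper() or first.isdigit():
                fields.append(f"E:{end}:")   # ambiguous: needs manual review
    return fields


def add_abbreviation_markup(paragraph, abbreviations=ABBREVIATIONS):
    """Append the abbreviation fields, if any, as end-of-line markup."""
    fields = abbreviation_fields(paragraph, abbreviations)
    return paragraph + " :" + "".join(fields) if fields else paragraph
```

An abbreviation followed by a lowercase word gets only an ABBR field, as in Figure 6. One followed by a capitalized word also gets an E field, as in Figure 7. A reviewer would then change E to EOL where a sentence really ends.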

Once the manual review was finished, we had a list of paragraphs with all the internal sentence boundaries marked. Final sentence-ending punctuation at the end of a paragraph was considered a sure sentence break that did not require manual review. Therefore, when using the sentence corpus for testing sentence splitters, the sequence of paragraphs between each pair of titles or headers could be handled as a continuous block of text with all internal sentence boundaries marked.
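Reading the reviewed corpus back into continuous blocks could look like the sketch below. Several details are assumptions not fixed by the description: the hypothetical names `parse_line` and `load_blocks`, joining paragraphs with a newline, and the rule that a paragraph ending in a comma, colon, or other non-terminal character continues into the next line.

```python
import re

# Trailing markup: whitespace, then ":TAG:value:TAG:value:" up to the line end.
MARKUP = re.compile(r"^(?P<text>.*?)\s+:(?P<fields>(?:[A-Za-z]+:[^:\s]+:)+)$")
CLOSERS = "\"'\u201d\u2019)]"


def parse_line(line):
    """Return None for a header line, else (text, internal sentence ends).

    Only EOL fields are sentence ends; ABBR fields and unresolved E fields
    are ignored.
    """
    line = line.rstrip("\n")
    if line.startswith(":h1:"):
        return None
    m = MARKUP.match(line)
    if m is None:
        return line, []
    parts = m.group("fields").split(":")[:-1]  # drop the item after the final colon
    pairs = zip(parts[::2], parts[1::2])
    return m.group("text"), [int(value) for tag, value in pairs if tag == "EOL"]


def load_blocks(lines):
    """Group the paragraphs between headers into (block_text, end_offsets).

    A paragraph whose last character (ignoring closing quotes/brackets) is
    ., ? or ! ends a sentence; otherwise the sentence continues.
    """
    blocks, text, ends = [], "", []
    for line in lines:
        parsed = parse_line(line)
        if parsed is None:                 # a header closes the current block
            if text:
                blocks.append((text, ends))
            text, ends = "", []
            continue
        para, para_ends = parsed
        if not para.strip():
            continue
        if text:
            text += "\n"
        base = len(text)
        ends.extend(base + e for e in para_ends)
        body = para.rstrip()
        if body.rstrip(CLOSERS)[-1:] in (".", "?", "!"):
            ends.append(base + len(body) - 1)
        text += para
    if text:
        blocks.append((text, ends))
    return blocks
```

Each block's text is what a splitter would receive, and its offsets are the reference breaks it would be scored against.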

To test the performance of a sentence splitter, we sent it each continuous block of text as a long text string. The sentence splitter then separated the blocks into sentences while the scoring program noted the character offset of each sentence end. These offsets were then compared with the offsets defined in the sentence corpus. Every discrepancy (sentence break in the corpus but not in the splitter result, or vice versa) counted as an error.
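A sketch of the offset-noting step, assuming a splitter that returns a list of sentence strings that appear in the input in order, possibly with surrounding whitespace trimmed. Splitters that return character spans directly (NLTK's Punkt tokenizer, for example, offers `span_tokenize`) would not need this. The function names are hypothetical.

```python
def sentence_end_offsets(text, sentences):
    """Map a splitter's sentence strings back to end offsets in text.

    Each offset is the index of the sentence's last character. Raises
    ValueError if a sentence cannot be found in order.
    """
    ends, cursor = [], 0
    for sentence in sentences:
        s = sentence.strip()
        if not s:
            continue
        pos = text.find(s, cursor)
        if pos < 0:
            raise ValueError(f"sentence not found in text: {s[:40]!r}")
        cursor = pos + len(s)
        ends.append(cursor - 1)
    return ends


def discrepancies(reference_ends, predicted_ends):
    """Every break in one set but not the other counts as an error."""
    ref, hyp = set(reference_ends), set(predicted_ends)
    return len(hyp - ref), len(ref - hyp)  # (spurious breaks, missed breaks)
```

For a block with reference breaks at 13, 27, and 37, a splitter that finds only 13 and 37 produces one missed break and no spurious ones.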

Trainable sentence splitters typically expect training files to contain one sentence per line with an empty line between paragraphs. This format is easily created by reading the sentence corpus file, outputting the sentence lines according to the markup, and adding a blank line at the paragraph breaks.
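The conversion to training format is a short loop. This sketch assumes the paragraphs have already been parsed into hypothetical `(text, internal_end_offsets)` pairs with headers removed, where each offset points at a sentence's last character.

```python
def to_training_text(paragraphs):
    """Write one sentence per line with a blank line between paragraphs.

    paragraphs: iterable of (paragraph_text, internal_end_offsets) pairs.
    """
    out = []
    for text, ends in paragraphs:
        start = 0
        for end in sorted(ends):
            out.append(text[start:end + 1].strip())
            start = end + 1
        rest = text[start:].strip()
        if rest:
            out.append(rest)   # the paragraph-final sentence
        out.append("")         # blank line marks the paragraph break
    return "\n".join(out)
```

For example, `[("See the world. Then go home!", [13]), ("Next one.", [])]` becomes three sentence lines with a blank line after each paragraph.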

After the human review was finished, we started testing our candidate sentence splitters on the corpus, and the testing revealed some remaining corpus errors. If a sentence splitter disagreed with a sentence ending in the corpus, either the sentence splitter or the corpus was wrong. An examination of each error case showed when the latter was true, indicating necessary corpus fixes. The sentence splitters under evaluation also found different types of errors this way. We proceeded iteratively, applying the corpus fixes and then running the sentence splitters again until no more corpus errors were found.

Sentence Splitter Scoring Methodology

To evaluate a sentence splitter, we sent it each corpus text sequence (one or more paragraphs) and compared where it placed sentence breaks against the reference sentence break positions in the corpus.

A sentence splitter can make three kinds of mistakes:

- Type 1 (false positive) errors: detecting a sentence break that does not exist;

- Type 2 (false negative) errors: not detecting a sentence break; and

- Type 3 (sentence end) errors: correctly detecting a sentence break but not at the correct character position.

Type 3 errors occur when punctuation characters exist between the sentence-ending punctuation and the start of the next sentence, with the split being placed at the wrong punctuation position.

A few different definitions of Type 3 errors exist. We chose one that captures getting the right answer to the wrong problem. Here, the wrong problem is the looser requirement of detecting that a sentence boundary is present, without placing it at the final character position of the first sentence. Detecting a sentence boundary at an approximate position is still of some use to the user of a sentence splitter, although it is not strictly correct.

In Figure 8, the first sentence ends after the right parenthesis, before the white space that separates the sentences. A sentence break detected after the period would be one character to the left of the correct position, and a sentence break detected after the opening quotation mark would be two characters to the right of the correct position.

... end of sentence.) "Start of next one ...

Each sentence splitter is graded using an error score (the sum of these three error types divided by the total number of possible sentence-ending positions in the corpus). The possible sentence-ending positions are as follows:

- every question mark and exclamation mark, except those inside a URL;

- the last period of an ellipsis; and

- every period at the end of a word or an abbreviation, but not internal periods of abbreviations.

Periods are not considered when they are part of number expressions, URLs, or constructs (such as part number identifiers). This error score is the one most often seen in the sentence splitter literature, albeit without Type 3 errors; those are usually considered correct responses.
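The error score can be sketched as below. Two simplifications apply. First, `count_candidates` only approximates the list of possible sentence-ending positions: it skips tokens that look like URLs and does not merge spaced ellipses. Second, pairing a missed break with a spurious one as a Type 3 error when no letter or digit lies between them is our reading of the definition above. Both function names are hypothetical.

```python
OPENERS = "\"'\u201c\u2018(["
CLOSERS = "\"'\u201d\u2019)]"


def count_candidates(text):
    """Approximate count of possible sentence-ending positions: every ? and
    !, plus every token-final period (this covers the last period of an
    unspaced ellipsis and the final period of an abbreviation)."""
    n = 0
    for token in text.split():
        if "://" in token or token.lower().startswith("www."):
            continue  # ignore punctuation inside URLs
        core = token.lstrip(OPENERS).rstrip(CLOSERS)
        n += core.count("?") + core.count("!")
        if core.endswith("."):
            n += 1
    return n


def score(text, reference_ends, predicted_ends, n_candidates):
    """Count Type 1, 2 and 3 errors and compute the error rate."""
    ref, hyp = set(reference_ends), set(predicted_ends)
    missed = sorted(ref - hyp)      # Type 2 unless paired below
    spurious = sorted(hyp - ref)    # Type 1 unless paired below
    type3 = 0
    for r in list(missed):
        for p in spurious:
            lo, hi = min(r, p), max(r, p)
            # Only punctuation/whitespace between them: right boundary,
            # wrong character position.
            if not any(ch.isalnum() for ch in text[lo + 1:hi + 1]):
                missed.remove(r)
                spurious.remove(p)
                type3 += 1
                break               # stop iterating the list we just modified
    type1, type2 = len(spurious), len(missed)
    return {
        "type1": type1,
        "type2": type2,
        "type3": type3,
        "error_rate": (type1 + type2 + type3) / n_candidates,
    }
```

With the Figure 8 pattern `It ended.) "Next one."`, a break placed after the period or after the opening quotation mark, instead of after the parenthesis, is counted as a single Type 3 error rather than as one Type 1 and one Type 2 error.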

A more intuitive metric might be simply counting the number of errors divided by the number of sentences. However, this would not be a valid metric because the number of errors could (in theory) be greater than the number of sentences, with one possible error for each sentence ending (except the last) plus one possible error for each sentence-internal abbreviation.

An alternative is recall, precision, and the combined F1 score, but this approach is less intuitive for problems such as sentence splitting. Recall is calculated using Type 2 errors and precision is calculated using Type 1 errors.
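For comparison with the literature, precision, recall, and F1 follow directly from the error counts. In this sketch, `n_reference` (the number of reference sentence breaks) and the `type3_correct` switch are illustrative parameters. By default, Type 3 errors count as correct detections, as is usual in the literature.

```python
def precision_recall_f1(n_reference, type1, type2, type3=0, type3_correct=True):
    """Precision, recall and F1 from error counts.

    Type 1 errors are false positives and Type 2 errors are false negatives.
    If type3_correct is False, each Type 3 error counts as both.
    """
    fp, fn = (type1, type2) if type3_correct else (type1 + type3, type2 + type3)
    tp = n_reference - fn
    if tp <= 0:
        return 0.0, 0.0, 0.0
    precision = tp / (tp + fp)
    recall = tp / n_reference          # tp + fn == n_reference
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1
```

For example, 100 reference breaks with 5 Type 1 and 5 Type 2 errors give precision, recall, and F1 of 0.95 each.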

Sentence Splitters Evaluated

The systems listed in Table 1 were evaluated. All had Python 3 interfaces, although two of them are actually implemented in Java. The Python 3 interface method for the Java sentence splitters is indicated in Table 1’s Implementation column. Note that all implementations either have a self-contained tokenizer or are used in a processing pipeline where a tokenizer is called beforehand (Stanford CoreNLP and spaCy).

| Name | Methodology | Implementation | Training data | Reference |
|---|---|---|---|---|
| NLTK Punkt | Log-likelihood collocation statistics and heuristics | Pure Python | WSJ news wire corpus | Kiss and Strunk (2006) |
| Stanford CoreNLP ssplit | Uses the output of a finite-state automaton tokenizer, which includes end-of-sentence indicators | Java localhost server | None | https://stanfordnlp.github.io/stanfordnlp/corenlp_client.html |
| Apache OpenNLP SentenceDetector | Word features with a maximum entropy classifier | Java with named pipes | WSJ news wire corpus | Reynar and Ratnaparkhi (1997) |
| spaCy Sentencizer | Rule-based | Pure Python | None | https://spacy.io/api/sentencizer |
| splitta NB | Word features with a Naive Bayes classifier | Python | Brown corpus and WSJ news wire corpus | Gillick (2009) |
| splitta SVM | Word features with an SVM classifier | Python, using a third-party SVM module | Brown corpus and WSJ news wire corpus | Gillick (2009) |
| Detector Morse | Word features with an averaged perceptron classifier | Python, using the nlup NLP package | WSJ news wire corpus | https://github.com/cslu-nlp/DetectorMorse |
| DeepSegment | BiLSTM driving a CRF classifier | Python and TensorFlow | Various sources | https://github.com/bedapudi6788/deepsegment |

- NLP: natural language processing

- WSJ: Wall Street Journal

- SVM: Support Vector Machine

- BiLSTM: bidirectional long short-term memory recurrent neural network

- CRF: conditional random field

Sentence Splitters with Tokenization Problems

Preliminary tests with the sentence corpus showed systematic problems with the handling of non-ASCII punctuation in three of the sentence splitters. The most glaring case was described briefly in the introduction as part of the Type 2 Punkt errors. To put all the evaluated sentence splitters on an equal footing, we decided to fix these problems by adding the missing characters to their respective tokenizers.

The three sentence splitters (Punkt, splitta, and Detector Morse) are all written in Python and include their own purpose-built tokenizers. The main role of a tokenizer is to separate words from punctuation. The main classes of punctuation are sentence-ending punctuation, sentence-internal punctuation, quotation marks, parentheses, and hyphens.

It was the mishandling of "smart" quotation marks (the opening and closing single and double quotation marks in the Unicode General Punctuation block) that caused most of the problems. It prevented all three sentence splitters from detecting sentence endings when a quotation mark followed the final period. Figure 9 illustrates this with two examples:

... to live “by the book.” They liked knowing ...

In these cases, a single or double quotation mark remained attached to the period, which was then not recognized as the last character of the word (and hence not the end of the sentence). It was quite surprising to see this kind of problem in currently available packages. This lack of character handling may reflect the academic origin of these systems; they were all trained on the venerable Brown and/or WSJ corpora, which were both developed before Unicode came into use.

The Brown corpus was developed in 1964 and revised in 1979; see Francis and Kucera (1979). The first version of the WSJ corpus was built from Wall Street Journal articles published over three years, from 1987 to 1989; see Paul and Baker (1992). The Unicode 1.0 standard was published in 1992; see https://www.unicode.org/history/versionone.html.

There were also some dashes in the General Punctuation block that required special handling. Long dashes, such as em dashes or horizontal bars, usually separate words and must be handled as separate tokens. The ASCII hyphen, however, is usually treated as word-internal.
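The kind of tokenizer fix described above can be sketched as a whitespace tokenizer that peels quotation marks (ASCII and Unicode), brackets, and trailing commas, semicolons, and colons off each word. It also splits long dashes into their own tokens, while leaving periods, question marks, and exclamation points attached to the word. This is a hypothetical illustration, not the actual code of Punkt, splitta, or Detector Morse. Its simplifications, such as treating a trailing apostrophe as a closing quote, are deliberate.

```python
import re

# ASCII quotes plus U+2018, U+2019, U+201C, U+201D ("smart" quotes).
QUOTES = "\"'\u2018\u2019\u201c\u201d"
# Long dashes that separate words: en dash, em dash, horizontal bar.
LONG_DASHES = "\u2013\u2014\u2015"

_DASH_SPLIT = re.compile(f"([{LONG_DASHES}])")
_PEEL = re.compile(
    rf"^(?P<lead>[{QUOTES}(\[]*)(?P<core>.*?)(?P<trail>[{QUOTES})\],;:]*)$"
)


def tokenize(text):
    """Split on whitespace, then separate long dashes, quotes, brackets and
    trailing , ; : from words. Terminal punctuation stays on the word, so a
    splitter still sees tokens such as 'book.' or 'U.S.'."""
    tokens = []
    for chunk in text.split():
        for piece in _DASH_SPLIT.split(chunk):
            if not piece:
                continue
            if piece in LONG_DASHES:      # a long dash is its own token
                tokens.append(piece)
                continue
            m = _PEEL.match(piece)
            tokens.extend(m.group("lead"))    # each opener is one token
            if m.group("core"):
                tokens.append(m.group("core"))
            tokens.extend(m.group("trail"))   # each closer is one token
    return tokens
```

With this tokenizer, the Figure 9 text yields the separate tokens `book.` and `\u201d`, so the period is once again the last character of a word. The ASCII hyphen in "well-known" stays inside the word.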

A final problem occurred with ellipses consisting of three or more evenly spaced periods. This affected Punkt and splitta, but not Detector Morse. This type of ellipsis sometimes caused spurious sentence breaks after each period. In the example in Figure 10, both splitters detected three adjacent sentence breaks, one after each period:

... culture. We need to create . . . contemporary design[s] that ...

Tokenizers typically start by separating text at spaces and then split off the punctuation from the resulting words. An ellipsis of three or more evenly spaced periods confounds this approach because the entire pattern of periods and spaces must be treated as a single token.
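One way to keep a spaced ellipsis together is to try an ellipsis pattern before the generic whitespace-token pattern. This is a minimal sketch that assumes "evenly spaced" means exactly one space between periods. The function name is hypothetical.

```python
import re

# Three or more periods separated by single spaces, e.g. ". . .", tried
# before the generic "run of non-space characters" alternative.
_TOKEN = re.compile(r"\.(?: \.){2,}|\S+")


def tokens_with_spans(text):
    """Whitespace tokenization that keeps a spaced ellipsis as ONE token.

    Returns (token, start, end) triples; end is exclusive.
    """
    return [(m.group(), m.start(), m.end()) for m in _TOKEN.finditer(text)]
```

On the Figure 10 text, ". . ." comes out as a single token, whereas a plain `text.split()` would yield three separate "." tokens, each a candidate sentence break.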

Adaptations for Punkt

Fixing these Punkt tokenizer problems reduced the error rate by 1.3% (first two rows in Table 2). Punkt can also be given an abbreviation list, so we ran two experiments with one. The third row is the original code with the basic abbreviation list used by the Scribendi Accelerator: the abbreviations alone reduced the error rate by 2.6%. The fourth row is the code with tokenizer fixes and the full corpus abbreviation list. In this case, the abbreviations alone reduced the error rate by 3.6%. The tokenizer fixes made a measurable improvement to the error rate, and improving the abbreviation coverage had an even larger effect.

| Punkt updates | Error rate |
|---|---|
| Original | 5.97% |
| With tokenizer fixes, no abbreviation list | 4.67% |
| Original with basic abbreviation list | 3.41% |
| With tokenizer fixes and the corpus abbreviation list | 1.09% |

Adaptations for splitta

Fixing the splitta tokenizer reduced the error rate by over 1% (see Table 3). splitta actually has two models: a Naive Bayes classifier and a support vector machine classifier. Both share common infrastructure that internally calls the model-specific training and run-time code selected by the calling parameters.

splitta is also the only sentence splitter implemented in Python 2; the others are implemented in Python 3. We updated the splitta run-time and training procedure to Python 3 using the 2to3 utility. This utility is installed by default with the Python interpreter on Linux and is available on the command line. It is also located in the Tools/scripts directory of the Python root.

After the conversion, there were only two minor problems remaining to get the Python 3 version working:

- The original code passed a compare function to the built-in sort() method. This is not supported in Python 3, but the default sort behavior in Python 3 gives the same result.

- The original code invoked a command-line operation to load the compressed model files, which did not work in Python 3. We replaced this with code using the built-in gzip module, which had been commented out in the original code.
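Both fixes follow standard Python 3 idioms. In the sketch below, the compare function and the `save_model`/`load_model` helpers are hypothetical, and storing the model as a gzip-compressed pickle is an assumption about the file format. When the default order is not equivalent, `functools.cmp_to_key` is the general replacement for Python 2's `sort(cmp=...)`.

```python
import functools
import gzip
import pickle


def by_length_then_alpha(a, b):
    """An old-style cmp function: negative, zero, or positive."""
    if len(a) != len(b):
        return len(a) - len(b)
    return (a > b) - (a < b)


# Python 2: words.sort(cmp=by_length_then_alpha)
# Python 3: wrap the compare function as a key (or drop it when the
# default ordering already gives the same result).
words = ["stop", "a", "go", "be"]
words.sort(key=functools.cmp_to_key(by_length_then_alpha))


def save_model(obj, path):
    """Write a model object as a gzip-compressed pickle."""
    with gzip.open(path, "wb") as f:
        pickle.dump(obj, f)


def load_model(path):
    """Read a gzip-compressed pickle with the built-in gzip module instead
    of shelling out to an external decompression command."""
    with gzip.open(path, "rb") as f:
        return pickle.load(f)
```

Using `gzip.open` keeps model loading portable because it does not depend on a `gunzip` executable being available on the system.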

The training mode of splitta also did not work. On analyzing the source code, we discovered that the training procedure expected a markup code <S> at the end of each sentence. There is a hint of this in Gillick (2009), which talks about classifying period-terminated words as “S”, “A”, or “A+S”.

Instead of creating a special case by adding <S> to each line of the sentence-per-line corpus training data, we modified the training code to recognize line ends in the training data and take the same action triggered by the <S> markup.
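The idea of letting line ends play the role of the <S> marker can be sketched as a generator over sentence-per-line training data. Following the description in Gillick (2009), only period-terminated words are treated as examples. This is a hypothetical reimplementation, not splitta's code. It assumes that each line is already tokenized on whitespace.

```python
def period_examples(lines):
    """Yield (token, is_sentence_end) for every period-final token.

    The last token on each non-empty line is labeled as a sentence end,
    which takes the place of an explicit <S> marker.
    """
    for line in lines:
        tokens = line.split()
        for i, tok in enumerate(tokens):
            if tok.endswith("."):
                yield tok, i == len(tokens) - 1
```

For the lines "He met Dr. Smith." and "Then he left.", "Dr." is labeled as sentence-internal, while "Smith." and "left." are labeled as sentence ends. Blank paragraph-separator lines produce no examples.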

splitta is the only sentence splitter we evaluated that completely ignores question marks and exclamation points, which increased its error rate. Again, with the goal of putting it on a level playing field with the others, we added a post-processing step to apply the simplest possible handling: always insert a sentence boundary after these two characters.
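The "simplest possible handling" can be sketched as a post-processing pass over the splitter's output. It cuts after every "?" or "!" (plus any closing quotes or brackets) that is followed by whitespace. The function name is hypothetical.

```python
import re

# "?" or "!" plus any closing quotes/brackets, followed by whitespace.
_QE = re.compile(r"[?!][\"'\u201d\u2019)\]]*(?=\s)")


def split_on_qe(sentences):
    """Insert a sentence boundary after every ? or ! inside each sentence."""
    out = []
    for sentence in sentences:
        start = 0
        for m in _QE.finditer(sentence):
            out.append(sentence[start:m.end()].strip())
            start = m.end()
        tail = sentence[start:].strip()
        if tail:
            out.append(tail)
    return out
```

Because this rule always splits, it over-splits embedded fragments: 'He asked "why?" and left.' becomes two pieces. Handling such fragments requires the extra logic described in the next paragraph.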

Later on, we added logic to the handling of question marks and exclamation points to detect when they were ends of embedded sentence fragments and not sentence ends. The logic is identical to that described in the section “Question Marks and Exclamation Points” under the Punkt improvements below. This resulted in a small improvement, as shown in the last row of Table 3.

| splitta updates | NB error | SVM error |
|---|---|---|
| Original converted to Python 3 (does not split on “?” and “!”) | 4.26% | 4.29% |
| After adding basic splitting on “?” and “!” | 2.20% | 2.23% |
| After fixing the tokenizer | 0.96% | 1.10% |
| Improved “?” and “!” detection | 0.92% | 1.06% |

- NB: using the Naive Bayes classifier

- SVM: using the support vector machine classifier

Adaptations for Detector Morse

Fixing the Detector Morse tokenizer reduced the error rate by 1.41 percentage points, from 4.52% in the original implementation to 3.11%.

Untrained Sentence Splitter Results

Table 4 shows the results for each sentence splitter on the sentence corpus, using its default training data. The “level playing field” fixes made to Punkt, splitta, and Detector Morse are also included (except in the first row). Timings were measured on an Intel Core i3-2350M CPU @ 2.30 GHz (dual core).

| Name | Error rate | Type 1 errors | Type 2 errors | Type 3 errors | Time per 1,000 sentences |
|---|---|---|---|---|---|
| NLTK Punkt as used by the Accelerator: no tokenization fixes and basic abbreviation list | 3.41% | 1.46% | 1.94% | 0.01% | 0.23 sec. |
| NLTK Punkt, after fixing tokenization, using corpus abbreviations | 1.09% | 0.36% | 0.71% | 0.01% | 0.24 sec. |
| Stanford CoreNLP ssplit | 1.41% | 1.09% | 0.30% | 0.02% | 1.02 sec. |
| Apache OpenNLP SentenceDetector | 2.81% | 1.32% | 1.49% | 0.00% | 0.23 sec. |
| spaCy Sentencizer | 8.68% | 6.18% | 0.62% | 1.87% | 0.57 sec. |
| splitta NB | 0.92% | 0.49% | 0.43% | 0.00% | 0.55 sec. |
| splitta SVM | 1.06% | 0.29% | 0.77% | 0.00% | 3.54 sec. |
| Detector Morse | 3.11% | 2.01% | 1.10% | 0.00% | 0.54 sec. |
| DeepSegment | 31.86% | 10.06% | 21.79% | 0.01% | 37.88 sec. (no GPU) |

The top four results for the untrained sentence splitters are as follows. The error rates for the others are all more than 1% higher.

- splitta NB: 0.92%

- splitta SVM: 1.06%

- Punkt: 1.09%

- Stanford CoreNLP: 1.41%

The results for Punkt could be considered cheating if supplying an abbreviation list counts as using pre-trained data. However, none of the other sentence splitters evaluated can accept a predefined abbreviation list at run time: Apache OpenNLP accepts one, but only as part of the training process.

Experiments with Trainable Sentence Splitters

Four of the sentence splitters can be trained on sentence data, so we performed a second test on those (Table 5), training them on the sentence corpus. Because the sentence splitters were also evaluated on the sentence corpus, we used five-fold cross-validation.

| Sentence splitter | Training type |
|---|---|
| Punkt | Unsupervised |
| splitta | Supervised |
| Detector Morse | Supervised |
| Apache OpenNLP | Supervised |
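A minimal sketch of the five-fold cross-validation described above, assuming folds are contiguous blocks of the corpus (the source does not say how folds were drawn; shuffling is a common alternative). Each sentence is tested exactly once, by a model that never saw it during training.

```python
def k_fold_splits(items, k=5):
    """Yield (train, test) lists for k-fold cross-validation.

    Folds are contiguous blocks (an assumption), so neighboring sentences
    from the same document tend to land in the same fold.
    """
    n = len(items)
    bounds = [n * i // k for i in range(k + 1)]
    for i in range(k):
        test = items[bounds[i]:bounds[i + 1]]
        train = items[:bounds[i]] + items[bounds[i + 1]:]
        yield train, test
```

The overall error rate is then computed from the five test folds together, so every sentence in the corpus contributes exactly once.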

Improvements to Punkt

Aside from the tokenization issues, we made a few more changes to Punkt to improve the results. This section details the most significant changes.

Unsupervised Training Procedure

Punkt is unique in that it uses unsupervised training. It can train on arbitrarily long texts with no indication of where the sentence boundaries are, so little corpus preparation is necessary. However, any titles and headers should, ideally, be removed, as we did while generating the sentence corpus. Such removal prevents headers from running into the start of the next paragraph with no sentence break. Titles also tend to be over-capitalized, which could adversely affect the word statistics.

The training process needs to know where the sentence boundaries are in free-flowing text to learn how to detect them. This chicken-and-egg problem is solved by generating an abbreviation list first. The training process uses a heuristic to detect abbreviations as short strings that occur more often with a final period than would be expected if they only occurred as sentence-final words. With the list ready, the training process can then assume that any word followed by a period that is not an abbreviation is actually a sentence ending. It then learns the sentence boundary statistics from these cases.
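The two-stage bootstrapping idea can be sketched in a few lines. This is a deliberately simplified stand-in: Punkt scores abbreviation candidates with a log-likelihood ratio plus length and internal-period factors (Kiss and Strunk 2006), whereas the sketch uses a plain ratio test with arbitrary, hypothetical thresholds.

```python
from collections import Counter


def find_abbreviations(tokens, max_len=5, min_count=2, min_ratio=0.9):
    """Simplified abbreviation discovery: short alphabetic types that almost
    always occur with a final period (thresholds are illustrative only)."""
    with_period = Counter()
    total = Counter()
    for tok in tokens:
        has_period = tok.endswith(".")
        typ = (tok[:-1] if has_period else tok).lower()
        total[typ] += 1
        if has_period:
            with_period[typ] += 1
    return {
        typ
        for typ, n in total.items()
        if n >= min_count
        and typ.replace(".", "").isalpha()
        and len(typ.replace(".", "")) <= max_len
        and with_period[typ] / n >= min_ratio
    }


def provisional_boundaries(tokens, abbreviations):
    """Second stage: any period-final token that is not an abbreviation is
    assumed to end a sentence; these cases feed the boundary statistics."""
    return [
        tok.endswith(".") and tok[:-1].lower() not in abbreviations
        for tok in tokens
    ]
```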

The abbreviation discovery process can make mistakes by over- or under-generating abbreviations, negatively affecting the training data and performance. It is possible to preload a list of known abbreviations before training to reduce these errors. However, this mechanism is not well integrated into Punkt, and the abbreviation heuristic can remove entries in the preloaded list if they do not pass the heuristic tests during training. So preloaded abbreviations can silently disappear.

We made the abbreviation handling more robust by defining the preloaded abbreviations as fixed, unable to be removed during training. We also added an abbreviation stop list consisting of over-generated abbreviations that were observed to be incorrect. These two abbreviation lists allowed complete control of abbreviation definitions, with the ultimate quality depending on the amount of manual review invested in the process. When evaluation of the trained Punkt results showed errors caused by either over- or under-generated abbreviations, each culprit could be added to the applicable list and the training process rerun.
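The precedence between the two lists can be expressed as a single update step. In this sketch, scores and threshold are hypothetical placeholders for whatever the abbreviation heuristic computes during one training pass: fixed entries survive a low score, and stop-listed entries are never added.

```python
def update_abbreviations(abbrev_types, scores, threshold, fixed, stop_list):
    """Apply one round of heuristic scores to the abbreviation set.

    Stock behavior would drop any entry scoring below the threshold,
    including preloaded ones; here `fixed` entries are protected and
    `stop_list` entries (known false positives) are blocked.
    """
    result = set(abbrev_types)
    for typ, score in scores.items():
        if score >= threshold:
            if typ not in stop_list:
                result.add(typ)
        elif typ not in fixed:
            result.discard(typ)
    return result
```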

Using Word Frequency Counts

The Punkt training process builds word lists with yes/no flags for each word, indicating if words were seen at least once as capitalized or lowercase as well as in sentence-start or sentence-internal positions. However, this leads to very strict heuristics, such as “never seen as capitalized in a sentence-internal position.” We changed these lists to use word counts instead of binary flags and refined the heuristics to use logic like “the majority of the sentence-internal occurrences are lowercase.”
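The difference between the flag-style and count-based rules is easiest to see side by side. The class below is a hypothetical sketch, not Punkt's internal data structure; it treats any token whose first character is not uppercase as lowercase.

```python
from collections import Counter


class OrthoStats:
    """Per-word casing counts by position (replacing yes/no flags)."""

    def __init__(self):
        self.counts = Counter()  # keys: (word, position, case)

    def observe(self, word, sentence_initial):
        position = "start" if sentence_initial else "internal"
        case = "upper" if word[:1].isupper() else "lower"
        self.counts[(word.lower(), position, case)] += 1

    def flag_rule_lowercase(self, word):
        """Strict flag-style rule: never seen capitalized sentence-internally."""
        w = word.lower()
        return (self.counts[(w, "internal", "upper")] == 0
                and self.counts[(w, "internal", "lower")] > 0)

    def count_rule_lowercase(self, word):
        """Count-based rule: most sentence-internal occurrences are lowercase."""
        w = word.lower()
        return self.counts[(w, "internal", "lower")] > self.counts[(w, "internal", "upper")]
```

A single capitalized occurrence (for example, from a title-cased heading that slipped into the training text) flips the flag-style rule permanently, while the count-based rule still reflects the overwhelming majority.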

Question Marks and Exclamation Points

Most of the logic in Punkt revolves around periods and abbreviations, while question marks and exclamation points are simply considered as sentence breaks. This creates errors in the case of embedded sentences. Figure 11 shows three examples.

"Where did she go?" he said.

We added a simple heuristic to improve the results: do not insert a sentence break after a question mark or exclamation point if it is followed by a lowercase word or sentence-internal punctuation, after skipping quotes or parentheses.
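A sketch of this heuristic, handling only "?" and "!" (periods are left to the main splitter). The function names and the exact character sets for quotes, parentheses, and sentence-internal punctuation are our assumptions.

```python
QUOTES_PARENS = set("\"'“”‘’()[]")
CLOSERS = set("\"'”’)]")
INTERNAL_PUNCT = set(",;:")


def is_sentence_break(text, i):
    """Decide whether the '?' or '!' at text[i] ends the sentence."""
    j = i + 1
    if j < len(text) and text[j] in "?!":
        return False  # let the last mark in a run such as "?!" decide
    while j < len(text) and (text[j] in QUOTES_PARENS or text[j].isspace()):
        j += 1  # skip quotes, parentheses, and whitespace
    if j == len(text):
        return True  # end of text
    return not (text[j].islower() or text[j] in INTERNAL_PUNCT)


def split_question_exclamation(text):
    """Break after '?' or '!' unless it closes an embedded fragment."""
    sentences, start = [], 0
    for i, ch in enumerate(text):
        if ch in "?!" and is_sentence_break(text, i):
            end = i + 1
            while end < len(text) and text[end] in CLOSERS:
                end += 1  # keep closing quotes/brackets with their sentence
            piece = text[start:end].strip()
            if piece:
                sentences.append(piece)
            start = end
    tail = text[start:].strip()
    if tail:
        sentences.append(tail)
    return sentences
```

With this rule, the question mark in "Where did she go?" he said. no longer produces a break, because the next word after the closing quote is lowercase.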

Special Handling of Some Abbreviations

We added special handling of “Mr.”, “Mrs.”, “Ms.”, and “Dr.”, so they were not considered sentence breaks.

Several other abbreviations could benefit from special case rules, but we did not experiment further in this direction. For example:

- “p.” and “pp.” are not sentence-ending if followed by a number

- “fig.” and “no.” are only abbreviations if followed by a number (otherwise they are sentence-ending words)

- “in.” and “am.” are only abbreviations if preceded by a number (otherwise they are sentence-ending words)
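The title rule and the three untested ideas above could be written as a small rule function that runs before the statistical decision. This is a hypothetical sketch (we did not implement the last three rules). It returns True for "abbreviation, no break", False for "sentence-ending word", and None when no rule applies and the statistical splitter should decide.

```python
import re

_NUMBER = re.compile(r"\d+(?:[.,]\d+)*[.,;:]?")


def _is_number(tok):
    return tok is not None and _NUMBER.fullmatch(tok) is not None


def period_is_abbreviation(prev_tok, tok, next_tok):
    """Rule-based check on the final period of tok (hypothetical helper)."""
    word = tok.lower()
    if word in {"mr.", "mrs.", "ms.", "dr."}:
        return True  # titles are never sentence-final
    if word in {"p.", "pp."} and _is_number(next_tok):
        return True  # page references such as "p. 12"
    if word in {"fig.", "no."}:
        return _is_number(next_tok)  # abbreviation only before a number
    if word in {"in.", "am."}:
        return _is_number(prev_tok)  # abbreviation only after a number
    return None
```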

Abbreviations and Apache OpenNLP

Apache OpenNLP can train using a predefined abbreviation file in addition to the sentence files.

If an abbreviation file is not provided, the trainer takes all period-terminated words inside a sentence as abbreviations, which will catch all abbreviations except those at the ends of sentences.
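The behavior described above can be illustrated in Python. This is not OpenNLP's code (OpenNLP is a Java library); it is a hypothetical sketch, assuming whitespace tokenization, of why sentence-final abbreviations are missed when the list is inferred from sentence-internal periods.

```python
def internal_period_words(sentences):
    """Collect every period-terminated word that is not the last token of
    its sentence, i.e. the abbreviations inferable from internal periods."""
    found = set()
    for sent in sentences:
        tokens = sent.split()
        for tok in tokens[:-1]:
            if tok.endswith(".") and len(tok) > 1:
                found.add(tok)
    return found
```

Given "Dr. Lee agreed." and "Prices rose, etc.", only "Dr." is found: "etc." occurs only at a sentence end, so it never enters the inferred list.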

We trained both with and without the abbreviation list extracted while building the sentence corpus. The results were better when using the abbreviation list (see Table 6).

Trained Sentence Splitter Results

The first row of Table 6 shows the results of Punkt with the improvements described above along with the general abbreviation list defined in Grefenstette and Tapanainen (1994), section 4.2.4. The second row uses the abbreviation list extracted while building the sentence corpus, showing how using a high-coverage abbreviation list can improve the results.

| Sentence splitter | Error rate | Type 1 errors | Type 2 errors | Type 3 errors |
|---|---|---|---|---|
| NLTK Punkt with code improvements, using general abbreviation list | 0.94% | 0.73% | 0.20% | 0.01% |
| NLTK Punkt with code improvements, using corpus abbreviations | 0.58% | 0.35% | 0.22% | 0.01% |
| splitta NB | 0.97% | 0.72% | 0.26% | 0.00% |
| splitta SVM | 0.68% | 0.34% | 0.34% | 0.00% |
| Detector Morse | 0.71% | 0.32% | 0.39% | 0.00% |
| Apache OpenNLP, no abbreviation list | 1.39% | 0.83% | 0.56% | 0.00% |
| Apache OpenNLP, using corpus abbreviations | 1.08% | 0.52% | 0.56% | 0.00% |
| Stanford CoreNLP | 1.41% | 1.09% | 0.30% | 0.02% |

The top five trained results are listed below, with the untrained Stanford CoreNLP result included for comparison.

- Punkt, using corpus abbreviations: 0.58%

- splitta SVM: 0.68%

- Detector Morse: 0.71%

- splitta NB: 0.97%

- Apache OpenNLP, using corpus abbreviations: 1.08%

- Stanford CoreNLP: 1.41%

Interestingly, non-trainable Stanford CoreNLP has a Type 2 error rate of 0.30%, which is better than four of the trained splitter results. Aside from splitta NB, the trained results are all improvements over the untrained results.

Combining Sentence Splitters

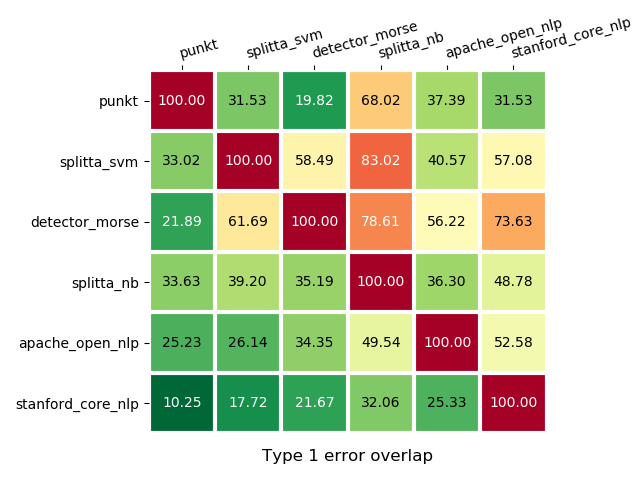

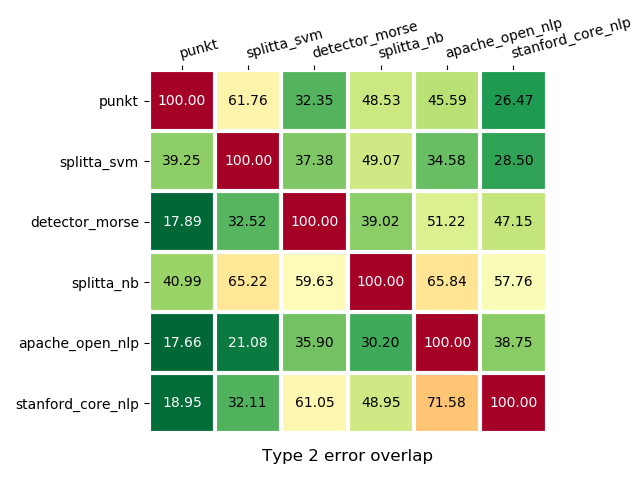

It may be possible to combine multiple sentence splitter results to give a result superior to any of the component parts (an ensemble method). As a preliminary investigation of this idea, we looked for any overlap between the error results of the top six sentence splitters listed above (see Tables 7 and 8).

Tables 7 and 8 are row-oriented: the row for a given sentence splitter shows what percentage of its errors overlap with the errors of each sentence splitter in the columns. For example, splitta NB has approximately twice as many Type 1 errors as splitta SVM (Table 6), so at most about half of the splitta NB errors can be shared with splitta SVM. The overlap in the splitta NB row/splitta SVM column is 39.2% in Table 7. Conversely, the overlap in the splitta SVM row/splitta NB column is 83.02%, because the smaller set of splitta SVM errors has a higher probability of overlapping with the larger set of splitta NB errors.
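A sketch of how such a row-oriented overlap table could be computed, assuming each splitter's errors are stored as a set of hashable error positions (for example, hypothetical (document_id, character_offset) pairs).

```python
def overlap_matrix(errors):
    """Row-oriented overlap: the percentage of the row splitter's errors
    that the column splitter also makes.

    errors: dict mapping splitter name -> set of error positions.
    """
    return {
        row: {
            col: 100.0 * len(row_errs & errors[col]) / len(row_errs) if row_errs else 0.0
            for col in errors
        }
        for row, row_errs in errors.items()
    }
```

The asymmetry is consistent with Table 6: the shared errors are the same set viewed from both rows, and indeed 39.2% × 0.72% ≈ 0.282% and 83.02% × 0.34% ≈ 0.282% of all candidate positions.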

Ensembling Methods

Combining sentence splitters falls under the stacking ensembling method. This technique consists of running multiple sentence splitters independently and then creating a separate classifier to combine the results, with the goal of achieving a lower error rate than any of the component sentence splitters. The method name comes from the idea that the new classifier is stacked on top of the existing ones.

There are several ways to build a stacked classifier, listed here in order of increasing complexity:

- Judicious combination of sentence splitters, exploiting the overlaps in Type 1 and Type 2 error results

- Majority voting among three or more sentence splitters

- Logistic regression based on individual sentence splitter results (yes or no sentence split decisions)

- Logistic regression based on confidence values of the individual sentence splitter results, possibly with other features

- A neural network based on the confidence values of the individual sentence splitter results, possibly with other features
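Of the options above, majority voting is the easiest to prototype. A minimal sketch, assuming each splitter's output has already been aligned to the same list of candidate positions (the alignment step is not shown):

```python
def majority_vote(decisions):
    """Combine yes/no boundary decisions from several splitters.

    decisions: one list per splitter, each holding one bool per candidate
    position (True = sentence break). With an even number of splitters,
    ties resolve to "no break".
    """
    n = len(decisions)
    return [sum(votes) * 2 > n for votes in zip(*decisions)]
```

The Type 1/Type 2 overlaps in Tables 7 and 8 suggest why this can help: a break proposed by only one splitter is outvoted when the other two agree.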

We did not investigate ensembling methods further, leaving this for a future project.

Comparison with the Grammarly Blog Post “How to Split Sentences”

Grammarly has published a blog post on their site highlighting problems in sentence splitting. They tested seven sentence splitters on two different corpora; their post can be viewed here: https://www.grammarly.com/blog/engineering/how-to-split-sentences/.

Four sentence splitters were used in both the Grammarly test and ours, and, for the most part, these were the best performers in both evaluations. The common sentence splitters are as follows:

- NLTK Punkt

- splitta

- Apache OpenNLP

- Stanford CoreNLP

Grammarly tested unmodified sentence splitters on two corpora: OntoNotes 4 and MASC. The MASC corpus contains a considerable number of mistakes, and the final statistics published in the blog post are for the OntoNotes corpus only. Grammarly also trained the OpenNLP splitter using a 70% training/30% testing split on the OntoNotes corpus. Finally, they applied some post-processing heuristics to the OpenNLP results.

Grammarly used a slightly different scoring method from ours. They counted the number of sentences not split correctly, while we counted the number of errors over all potential sentence-end positions (both sentence-internal and sentence-ending).

We also counted splitting at incorrect character positions as an error (Type 3 errors), while Grammarly did not consider these errors. However, these errors were so infrequent in our results that we can ignore the difference.

The Grammarly method could not account for the (admittedly rare) case of more than one error in the same sentence; one or more errors always counted as one. The main difference between the two methods, however, is the denominator used in the error calculation. Grammarly divided by the number of sentences, whereas we divided by the number of potential sentence-end positions. The latter is always larger, provided the corpus contains at least some sentence-internal candidates such as abbreviations.

Since we used a larger denominator, our error percentages for the same sentence splitting results were generally smaller. Therefore, to compare the Grammarly results with our own, we compensated by multiplying our error rates by the number of potential sentence-end positions in the corpus divided by the number of sentences, producing the results shown in Table 9. This makes them comparable with the Grammarly results shown in Table 10.
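The conversion is a single rescaling. A minimal sketch with hypothetical function names:

```python
def per_position_error_rate(num_errors, num_candidate_positions):
    """Our metric: errors divided by all potential sentence-end positions."""
    return num_errors / num_candidate_positions


def to_per_sentence_rate(position_rate, num_candidate_positions, num_sentences):
    """Rescale to a per-sentence denominator, as done for Table 9.

    Every error is still counted, so a sentence with two errors counts
    twice, whereas Grammarly counted it once; the difference is negligible
    when such sentences are rare.
    """
    return position_rate * num_candidate_positions / num_sentences
```

With hypothetical counts of 10 errors over 1,200 candidate positions in 1,000 sentences, the per-position rate of about 0.83% becomes 1.0% per sentence. Working backward from the published tables, the ratio between matching rows of Table 6 and Table 9 (0.58% versus 0.68% for Punkt, 1.41% versus 1.66% for Stanford CoreNLP) implies roughly 1.17 to 1.18 candidate positions per sentence in the Scribendi corpus.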

Scribendi Results for the Common Sentence Splitters

The common sentence splitters, aside from CoreNLP, were trained and tested on the Scribendi sentence corpus. CoreNLP is not trainable, nor can it use an external abbreviation list. Evaluation after training used five-fold cross-validation. Errors have been normalized to count errors per sentence. Both Punkt and splitta have problems with Unicode punctuation handling, which we fixed.

| Sentence splitter | Out of the box errors | Errors after fixing tokenization | Errors after training |
|---|---|---|---|
| NLTK Punkt | 7.03% | 1.28% (using corpus abbreviation list) | 0.68% (corpus abbreviations and improved heuristics) |
| splitta SVM | 5.05% | 1.25% (also added splitting on ? and !) | 0.80% |
| Apache OpenNLP | 3.31% | | 1.64% |
| Apache OpenNLP, trained using the corpus abbreviation list | | | 1.27% |
| Stanford CoreNLP | 1.66% | | |

Grammarly Results for the Common Sentence Splitters

The OpenNLP sentence splitter was trained on the OntoNotes 4 corpus using a 70% training/30% testing split.

| Sentence splitter | Out of the box errors | Errors after training | Errors after adding post-processing heuristics |
|---|---|---|---|
| NLTK Punkt | 2.9% | | |
| splitta | 7.7% | | |
| Apache OpenNLP | 2.6% | 1.8% | 1.6% |
| Stanford CoreNLP | 1.7% | | |

Comparing the Results

The results are not directly comparable because they are based on different corpora as well as different versions of the sentence splitters. The Grammarly blog post dates from 2014, and most of the sentence splitters they tested were earlier versions than those available today. Most of the error rates are within 3 percentage points of each other. In particular, the OpenNLP and CoreNLP results were quite close, as highlighted in red in the corresponding rows of Table 9 and Table 10. splitta does not split sentences on question marks and exclamation points, which explains its high out-of-the-box error rate in both evaluations.

While Grammarly experimented with training one sentence splitter and then adding heuristics, we went further by training all the sentence splitters that were trainable, modifying source code to fix tokenization problems and improve heuristics, and using high-coverage abbreviation lists. Instead of relying on external corpora, we developed our own corpus from samples of Scribendi-edited documents. This required extracting a high-coverage abbreviation list that we also used to improve the performance of Punkt and Apache OpenNLP. For OpenNLP, training with the abbreviation list reduced the error rate by 23% (Table 9). The abbreviation list plus source code changes made a large improvement in Punkt, as shown in Tables 2 and 6.

As a result, we obtained error rates under 1% (using Grammarly’s error metric) with two of the common sentence splitters: Punkt and splitta. By design, splitta does not use abbreviation lists; its gains came from the tokenizer fixes, training, and the post-processing we added to detect sentence boundaries at question marks and exclamation points.

Conclusion

The goal of this project was to achieve a sentence splitter error rate of less than 1% on Scribendi-edited data. We achieved this result with three of the eight sentence splitters evaluated: Punkt, splitta, and Detector Morse. To reach this level, it was necessary to train the sentence splitters on domain data. We also made source code modifications to fix tokenization problems and improve heuristics.

For training and testing, we developed a corpus made of samples of Scribendi-edited data because they represent the documents used with the Accelerator. Building our own corpus also allowed us to maintain control over the quality. We consider the corpus to have a wide domain coverage because of the large variety of documents in the database. In practice, any sentence splitter will be used in a particular context, and the way to maximize its performance is to train it on a sufficiently wide sample of representative text.

In creating the corpus, we realized that we needed an abbreviation list because the final period of abbreviations represented the most ambiguous of the potential sentence-ending positions. This meant that the abbreviation positions were where most of the manual review of the sentence corpus would take place. Fortunately, the Punkt sentence splitter has an automatic abbreviation detection heuristic, which we used for this purpose. However, since it was generated automatically, the abbreviation list itself also required manual review in order to remove falsely generated entries and add missing ones.

Punkt and OpenNLP can accept external abbreviation lists, and we experimented with the corpus abbreviation list as well as two more generic lists. Punkt showed strong improvement when supplied with external abbreviation lists, as shown in Table 2. Unsurprisingly, using the full corpus abbreviation list gives the greatest performance boost. Our abbreviation list is probably domain-specific to some extent. One notable domain-specific class is abbreviations used in journal names in academic citations (“Syst.”, “Softw.”, “Exp.”, “Ophthalmol.”, etc.).

The closeness of the top scores is interesting because the sentence splitters use complementary technologies. Punkt uses unsupervised learning with word statistics and collocation heuristics, while splitta and Detector Morse use supervised learning with word features and classic classification techniques.

Punkt benefits from a manually verified abbreviation list but only needs free-flowing paragraphs of sentences for training. Very large training datasets can, therefore, be generated fairly easily. We did, however, find that extracting a high-coverage abbreviation list, required for top performance, is a labor-intensive process.

splitta and Detector Morse do not use abbreviation lists but require extensive training corpus preparation, in which all sentence boundaries must be marked. This is also a labor-intensive process, which tends to limit the size of the training data.

References

Francis, W. N. and H. Kucera. “Brown Corpus Manual: Manual of Information to Accompany a Standard Corpus of Present-Day Edited American English for Use with Digital Computers.” (1979). http://icame.uib.no/brown/bcm.html

Gillick, D. “Sentence Boundary Detection and the Problem with the U.S.” In Proceedings of Human Language Technologies: The 2009 Annual Conference of the North American Chapter of the Association for Computational Linguistics, Companion Volume: Short Papers (2009): 241–244. https://www.aclweb.org/anthology/N09-2061.pdf

Grefenstette, G. and P. Tapanainen. “What is a Word, What is a Sentence? Problems of Tokenization.” In Proceedings of the 3rd Conference on Computational Lexicography and Text Research, Budapest, Hungary (1994): 79–87. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.28.5162&rep=rep1&type=pdf

Kiss, T. and J. Strunk. “Unsupervised Multilingual Sentence Boundary Detection.” Computational Linguistics 32, no. 4 (2006): 485–525. https://www.aclweb.org/anthology/J06-4003.pdf

Koehn, P. “Europarl: A Parallel Corpus for Statistical Machine Translation.” In Conference Proceedings: the Tenth Machine Translation Summit, Phuket, Thailand, AAMT (2005): 79–86. https://homepages.inf.ed.ac.uk/pkoehn/publications/europarl-mtsummit05.pdf

Mikheev, A. “Periods, Capitalized Words, etc.” Computational Linguistics 28, no. 3 (2002): 289–318. https://www.aclweb.org/anthology/J02-3002.pdf

Paul, D. B. and J. M. Baker. “The Design for the Wall Street Journal-based CSR Corpus.” Proceedings IEEE International Conference on Acoustics, Speech and Signal Processing, Banff (1992): 899–902. https://www.aclweb.org/anthology/H92-1073.pdf

Read, J., R. Dridan, S. Oepen, and L. J. Solberg. “Sentence Boundary Detection: A Long Solved Problem?” In Proceedings of COLING (2012): 985–994. https://www.aclweb.org/anthology/C12-2096.pdf

Reynar, J. and A. Ratnaparkhi. “A Maximum Entropy Approach to Identifying Sentence Boundaries.” In Proceedings of the 5th Conference on Applied Natural Language Processing (1997): 16–19. https://www.aclweb.org/anthology/A97-1004.pdf