Written by: Daniel Ruten

Grammatical error correction (GEC) tools that rely on methods of natural language processing (NLP) have a fairly long history. Recent advances in AI and machine learning have greatly improved the methods and fidelity of these tools in recognizing the patterns of written language. As a result, such tools have become useful not only for novice writers and non-native English speakers but also for experienced writers and even professional editors.

Many GEC tools are now available. Some basic tools are included as a built-in function of word processors (e.g., Microsoft Word and Google Docs), while more sophisticated standalone tools (e.g., Grammarly, Antidote, and the Scribendi Toolbar) incorporate machine learning methods to improve their versatility and usefulness. To date, however, few of these tools have employed deep learning—an advanced subfield of machine learning that uses algorithms considered to mimic the workings of the human brain. This is primarily due to the large annotated datasets that are required to conduct supervised learning with artificial neural networks. These neural networks enable a method of grammar correction that, instead of being rule-based, more accurately reflects the subtle nuances of human language and is able to continually learn and improve itself.

Introducing the Scribendi Accelerator

The editing and proofreading company Scribendi has developed a new GEC tool geared toward professional editors called the Accelerator. This tool relies on deep learning and is based on a dataset of more than 30 million sentences annotated by professional editors. As part of the Scribendi Toolbar, an add-on for Microsoft Word, the Accelerator is designed to help editors improve their workflow and editing speed by correcting errors and inconsistencies in English text, as well as providing suggestions for further writing improvements. It can be especially useful to run on edited text as a final measure to catch any remaining errors or inconsistencies.

This post will compare the performance of the Scribendi Accelerator on a sample text to that of other major GEC tools in order to evaluate its efficacy. The first section will explain the methodology used in this evaluation. The second will provide the results of the quantitative and qualitative evaluation of six GEC tools: the Scribendi Accelerator, Grammarly, Ginger, AuthorOne, Antidote, and ProWritingAid. The third section will discuss how the Accelerator’s performance compares to that of its peers in both quantitative and qualitative terms. Then, the post will conclude by summarizing the overall evaluation and the significance of the results.

Methodology

This evaluation uses simple formulas and rubrics to determine the effectiveness and value of a GEC tool to a user. The first and most important component of this method is termed the Core Evaluation, which measures the efficacy of a GEC tool in correcting clear errors. The second component is termed the Supplementary Evaluation, which measures the value a GEC tool offers by suggesting non-essential writing improvements. The final component of this method is the Qualitative Assessment, which uses a rubric to evaluate the state of the text after incorporating the changes suggested by the GEC tool. These different aspects are combined to holistically understand the value that a given GEC tool provides to a user.

Unlike most current automated evaluation methods, this method does not rely on a “gold standard” approach that checks whether a single correction was applied by a given tool word-for-word. Such an approach relies on the assumption that there is only one correct way to revise an error in a given text. However, this does not adequately reflect the nuances of natural language and the task of editing, as grammatical errors can typically be resolved in multiple, equally valid ways. Instead, this method is based on that of a previous evaluation of the Accelerator and is designed to take into account the subjective aspects of language and grammatical error correction.
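The difference between the two approaches can be sketched in a few lines of Python. This is only an illustration: the evaluation in this post relies on human judgment rather than a lookup, and the function names and the set of acceptable corrections are hypothetical. The phrases come from a correction pair discussed later in this post.

```python
from typing import Set


def exact_match(suggestion: str, gold: str) -> bool:
    """Gold-standard scoring: only one reference correction counts."""
    return suggestion.strip() == gold.strip()


def any_valid_match(suggestion: str, acceptable: Set[str]) -> bool:
    """Scoring that accepts any correction judged valid (hypothetical stand-in
    for an editor's judgment)."""
    return suggestion.strip() in acceptable


# Erroneous source text: "because of that reasons"
gold = "because of those reasons"
acceptable = {"because of those reasons", "for these reasons"}

for suggestion in ("because of those reasons", "for these reasons"):
    print(suggestion, exact_match(suggestion, gold), any_valid_match(suggestion, acceptable))
```

Under the single-reference check, “for these reasons” is scored as a miss even though it resolves the error; under the second check, both corrections count.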

The sample text used in this evaluation consists of a short undergraduate assignment written by the author (with various errors introduced), combined with seven sentences from the public National University of Singapore Learner Corpus dataset. In total, the document is 516 words long.

1. Core Evaluation

The first component of the evaluation is intended to measure the primary function offered by GEC tools: the correction of clear grammatical errors. It is expressed by the following formula:

Core Value (CV) = A – (B + C)

where A represents the number of grammatical errors corrected by the GEC tool, B represents the number of errors introduced into the text by the tool, and C represents the number of errors that the tool identified but provided incorrect solutions for.

Spelling errors are not included in the A category since such basic error corrections are already offered by default in most word processors. Capitalization errors are included, however, primarily to evaluate GEC tools’ performance in contextually recognizing proper nouns.

This formula implies that when a GEC tool identifies an error but offers an incorrect solution, the negative effect on the user experience is roughly equal to instances in which it introduces an error into correct text. This is partly because an incorrect solution may mislead a user into thinking that the error has been resolved.
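As a quick worked example, the formula can be written as a small Python function. The function and parameter names are illustrative assumptions; the counts are Grammarly’s figures from Table 1.

```python
def core_value(corrected: int, introduced: int, incorrect: int) -> int:
    """Core Value (CV) = A - (B + C).

    corrected  -> A: grammatical errors corrected by the tool
    introduced -> B: errors the tool introduced into correct text
    incorrect  -> C: errors identified but given an incorrect solution
    """
    return corrected - (introduced + incorrect)


# Grammarly in Table 1: A = 21, B = 1, C = 4
print(core_value(corrected=21, introduced=1, incorrect=4))  # 16
```

Because B and C are summed before subtraction, an introduced error and an incorrect solution each cost exactly one corrected error.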

2. Supplementary Evaluation

This part of the evaluation seeks to measure the supplemental value of GEC tools in offering nonessential improvements to writing. It is expressed in the following formula:

Supplementary Value (SV) = D – E

where D represents the number of improvements suggested (i.e., changes that are not necessary but are considered to improve the text) and E represents the number of unnecessary or “neutral” changes (i.e., changes that are not incorrect but are unnecessary and are not considered to improve the text).

Improvements include changes that make the text more concise (e.g., removing unnecessary articles and filler words) or make phrasing more direct (e.g., changing “for automatic detection of” to “to automatically detect”); changes that improve readability (e.g., a comma after “therefore” or hyphenation of certain compound words/adjectives); and changes in wording that more closely adhere to common usage.

Neutral changes include changes whose suitability depends on the dialect of English or style guide being used (e.g., serial commas or commas after e.g. and i.e.); rewording that has no discernible rationale or positive effect on the text but does not change the meaning; and changes suggested to specialized terminology (context-dependent).

The way this supplemental value is calculated assumes that neutral/unnecessary changes to the text detract from other helpful suggestions—primarily because of the additional time cost for the user to review and possibly ignore or reverse unnecessary changes. This implies that users of the tool will only consider additional improvements worth the time and effort it takes to use the tool if these improvements are not accompanied by unnecessary and unhelpful revisions to their writing. Consideration of these factors can more holistically compare the value that GEC tools offer their users, especially considering that some prominent GEC tools advertise an ability to significantly improve their users’ writing.
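A minimal sketch of this calculation follows, again with illustrative names. It shows how a tool that suggests many improvements can still end up with a negative score once neutral changes are subtracted, using two rows of Table 1.

```python
def supplementary_value(improvements: int, neutral_changes: int) -> int:
    """Supplementary Value (SV) = D - E."""
    return improvements - neutral_changes


# Antidote in Table 1: the most improvements (D = 12), but 13 neutral changes
antidote_sv = supplementary_value(improvements=12, neutral_changes=13)

# Scribendi Accelerator in Table 1: fewer improvements (D = 7), no neutral changes
accelerator_sv = supplementary_value(improvements=7, neutral_changes=0)

print(antidote_sv, accelerator_sv)  # -1 7
```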

3. Additional Qualitative Assessment

Finally, a short qualitative assessment looks at the overall sample text before and after incorporating the GEC tools’ suggestions to consider the corrections in context. The following rubric guides this assessment:

a) Were corrections made consistently? Are there instances of errors that were corrected in one sentence but not detected in another?

b) Are sentence-by-sentence corrections suitable within the wider context of the paragraph? Did any corrections potentially change the intended meaning as suggested by the context? Are there instances where the use of specialized terminology was made inconsistent/incorrect?

c) Are “neutral”/unnecessary changes applied consistently throughout the document?

d) Do any changes negatively affect the readability or flow in the wider context?

Results

Table 1 below shows the results of the core and supplementary evaluations. In the attached spreadsheet, the errors corrected by each tool are also categorized according to the authoritative Chicago Manual of Style (17th edition). As can be seen, the Scribendi Accelerator 2.3.0.9 had the highest score in the core evaluation, followed by Grammarly (c. April 2020) and Antidote (c. October 2020). In the supplementary evaluation, the Accelerator again achieved the highest score, followed by AuthorOne (April 2020) and then Ginger (September 2020). Notably, the Accelerator offered only one incorrect solution and was the only tool that introduced neither errors nor unnecessary changes into the text. It also corrected more errors than any other tool tested.

Table 1

GEC tool evaluation results. The dates in parentheses indicate approximately when each tool was tested (where no version numbers were available).

| GEC Tool | A (Errors Corrected) | B (Errors Introduced) | C (Incorrect Solutions) | D (Improvements Suggested) | E (Neutral Changes) | Core Evaluation Total | Supplementary Evaluation Total |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Grammarly (April 2020) | 21 | 1 | 4 | 7 | 6 | 16 | 1 |
| ProWritingAid (March 2020) | 15 | 3 | 4 | 9 | 12 | 8 | -3 |
| AuthorOne (April 2020) | 7 | 1 | 0 | 6 | 2 | 6 | 4 |
| Scribendi Accelerator 2.3.0.9 | 26 | 0 | 1 | 7 | 0 | 25 | 7 |
| Ginger (September 2020) | 9 | 2 | 1 | 3 | 0 | 6 | 3 |
| Antidote (October 2020) | 19 | 2 | 2 | 12 | 13 | 15 | -1 |
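The totals and rankings can be reproduced from the raw counts in Table 1. The sketch below is one way to do so in Python; the class and variable names are assumptions made for illustration and are not part of any tool’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCounts:
    name: str
    a: int  # errors corrected
    b: int  # errors introduced
    c: int  # incorrect solutions
    d: int  # improvements suggested
    e: int  # neutral changes

    @property
    def core(self) -> int:
        return self.a - (self.b + self.c)

    @property
    def supplementary(self) -> int:
        return self.d - self.e


TABLE_1 = [
    ToolCounts("Grammarly", 21, 1, 4, 7, 6),
    ToolCounts("ProWritingAid", 15, 3, 4, 9, 12),
    ToolCounts("AuthorOne", 7, 1, 0, 6, 2),
    ToolCounts("Scribendi Accelerator", 26, 0, 1, 7, 0),
    ToolCounts("Ginger", 9, 2, 1, 3, 0),
    ToolCounts("Antidote", 19, 2, 2, 12, 13),
]

# sorted() is stable, so tied tools keep their Table 1 order.
core_ranking = sorted(TABLE_1, key=lambda t: t.core, reverse=True)
supp_ranking = sorted(TABLE_1, key=lambda t: t.supplementary, reverse=True)

for tool in core_ranking:
    print(f"{tool.name}: core={tool.core}, supplementary={tool.supplementary}")
```

Sorting by each score separately makes it easy to see that the two evaluations order the tools differently: Grammarly is second in the core ranking but fourth in the supplementary ranking.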

The summaries and qualitative assessments of the tools are as follows.

Grammarly (April 2020)

Test summary

Grammarly outperformed most tools in the core evaluation (final score 16) and was only outperformed by the Accelerator (final score 25). It corrected the second-highest number of errors.

Besides the correction of clear errors, Grammarly’s improvements to the writing slightly outweigh unhelpful suggestions, though a significant number of unnecessary changes were made. In this regard, it was outperformed by the Accelerator, AuthorOne, and Ginger, even though it suggested as many improvements as the Accelerator and more than either AuthorOne or Ginger.

Final text summary

In viewing the final text, it becomes apparent that Grammarly corrected several errors that most competitors did not (e.g., changing “monster among monster” to the plural “monsters”). However, its changes were not always applied consistently, and multiple unnecessary changes somewhat altered the tone of the text. It also suggested some rewordings based on how commonly a word was used, which risked hindering some of the distinctiveness of the writing. Other rewordings, however, notably improved the tone and conciseness of the writing. Its qualitative performance is exceeded only by the Accelerator.

Qualitative assessment

a) Were corrections made consistently? Are there instances of errors that were corrected in one sentence but not detected in another?

Corrections to punctuation varied; some offered incorrect solutions while other errors were glossed over. It did perform well at consistently applying parenthetical commas and resolving subject/verb agreement errors.

b) Are sentence-by-sentence corrections suitable within the wider context of the paragraph? Did any corrections potentially change the intended meaning as suggested by the context? Are there instances where the use of specialized terminology was made inconsistent/incorrect?

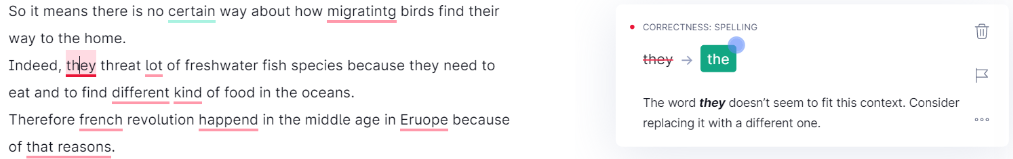

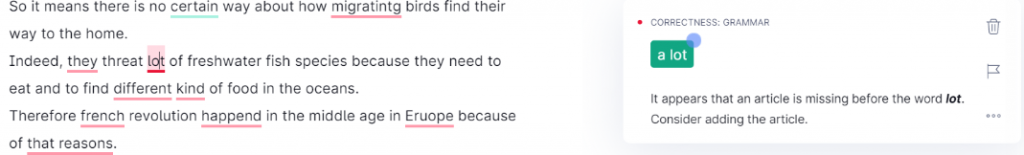

Some suggestions significantly changed the meaning in the context of the sentence or exacerbated syntax issues (e.g., “they threat lot of freshwater fish species” was changed to “the threat lot of freshwater fish species”). Changes were also made to text within quotation marks, which is not advisable.

c) Are “neutral”/unnecessary changes applied consistently?

Yes.

d) Do any changes negatively affect the readability or flow in the wider context?

A few changes negatively affected flow; for instance, every instance of the word “own” was deleted (e.g., “his own [x]”).

AuthorOne (April 2020)

Test summary

AuthorOne corrected the fewest errors of all the GEC tools. However, it introduced only one error and, unlike all the other tools tested, did not offer any incorrect solutions. In the core evaluation, it is tied with Ginger with a score of 6.

It did relatively well in the supplementary evaluation with a final score (4) second only to the Accelerator (7). This illustrates that although AuthorOne corrects fewer errors overall compared to similar tools, its recommendations can generally be trusted by the user.

Final text summary

On review of the final text, it became apparent that the corrections made, while helpful, were fairly sparse, and many issues were retained compared to the end results of competitors. Additionally, one of the “neutral” changes significantly altered the meaning of the sentence.

Qualitative assessment

a) Were corrections made consistently? Are there instances of errors that were corrected in one sentence but not detected in another?

AuthorOne performed relatively well in resolving the incorrect punctuation of some independent and non-restrictive clauses. It was less consistent in correcting other punctuation errors; for instance, it resolved one apostrophe error but retained others.

AuthorOne was somewhat inconsistent in other respects as well. It was able to detect and correct basic agreement issues when the subject and verb were very close to each other; however, it missed agreement errors when the subject and verb were separated by multiple words.

b) Are sentence-by-sentence corrections suitable within the wider context of the paragraph? Did any corrections potentially change the intended meaning as suggested by the context? Are there instances where the use of specialized terminology was made inconsistent/incorrect?

One rewording significantly changed the meaning of a sentence because it assumed a word was misused (“defer” was changed to “acknowledge”). Additionally, a change was recommended for a term in quotation marks, which is unhelpful. Finally, in one sentence a necessary definite article was deleted; this last instance was the only clear error introduced into the text.

c) Are “neutral”/unnecessary changes applied consistently?

The unnecessary changes were not of the type that required consistency.

d) Do any changes negatively affect the readability or flow in the wider context?

The only changes that negatively affected readability in this fashion were the instances of rewording and definite article deletion discussed above.

ProWritingAid (March 2020)

Test summary

ProWritingAid had middling performance compared to its peers in the core evaluation (final score 8) and the lowest score in the supplementary evaluation (9 improvements against 12 neutral changes, for a final score of -3). It did well at framing additional revisions as suggestions that can be ignored by the user, but unhelpful suggestions tended to slightly outweigh helpful ones in the test.

Final text summary

The text is in better shape than before, though many subject/verb agreement errors and article errors were retained in the final text. Some of the unnecessary changes the tool suggested negatively affected the writing in the final document.

Qualitative assessment

a) Were corrections made consistently? Are there instances of errors that were corrected in one sentence but not detected in another?

ProWritingAid corrected one subject/verb agreement error but missed others. It performed similarly with errors involving definite or indefinite articles. It performed somewhat more consistently in resolving punctuation issues, though some errors were still retained.

b) Are sentence-by-sentence corrections suitable within the wider context of the paragraph? Did any corrections potentially change the intended meaning as suggested by the context? Are there instances where the use of specialized terminology was made inconsistent/incorrect?

There were multiple instances where the changes made significantly hindered readability. In one case, a major syntax error was introduced: “The full extent of his monstrosity is revealed once Odysseusand his men meet him directly” in the original text was changed to “It reveals once the full extent of his monstrosity Odysseus and his men meet him directly.” At other points, the meaning was altered significantly by incorrect error solutions (e.g., changing “threat” to “treat”).

A suggested change from the passive voice to the first person also resulted in awkward phrasing that is very unusual for academic writing: “this is seen in Odysseus’ heroin actions” was changed to “I see this in Odysseus’ heroin actions.”

Changes were additionally suggested to text within quotation marks, which should not be edited.

c) Are “neutral”/unnecessary changes applied consistently?

Unnecessary changes were applied consistently regardless of context or suitability. For instance, the tool suggested that almost every single adverb in the text be deleted. This cumulatively resulted in the writing becoming less descriptive and flavorful.

d) Do any changes negatively affect the readability or flow in the wider context?

Multiple errors were introduced that negatively affected readability (for instance, the syntax error discussed earlier). Other changes made the text more concise but also significantly hindered its descriptiveness (see the indiscriminate deletion of adverbs discussed above).

Ginger (September 2020)

Test summary

Ginger somewhat underperformed compared to most of its peers in the test. In the core evaluation, it tied with AuthorOne with a score of 6. It did better in the supplementary evaluation (final score 3), where it was only outperformed by AuthorOne and the Accelerator. Notably, it did not introduce any “neutral” changes in the text.

Final text summary

There were some improvements to the text, though there were also some errors introduced.

Qualitative assessment

a) Were corrections made consistently? Are there instances of errors that were corrected in one sentence but not detected in another?

Ginger corrected two instances of missing spaces between words in the text but missed two other spacing errors. It also successfully corrected one instance of apostrophe misuse but incorrectly revised another (i.e., “cant’” was revised to “can’t’”). It corrected some instances of subject/verb agreement when the words were close together but overlooked instances where the subject and verb were separated by more than one word.

It was more consistent in correcting the capitalization of proper nouns and recognizing incorrect word usage (e.g., “to” was correctly revised to “two”).

b) Are sentence-by-sentence corrections suitable within the wider context of the paragraph? Did any corrections potentially change the intended meaning as suggested by the context? Are there instances where the use of specialized terminology was made inconsistent/incorrect?

Ginger’s revisions were fairly minimal, and so it did not risk changing the meaning of any words or phrases.

c) Are “neutral”/unnecessary changes applied consistently?

No changes of this type were noted.

d) Do any changes negatively affect the readability or flow in the wider context?

The only change made that significantly affected readability was the incorrect addition of a definite article before “Polyphemus’ cave.”

Antidote (October 2020)

Test summary

Antidote performed well in the core evaluation with a score of 15 (one below Grammarly). It had the third-highest number of error corrections overall (19). It also offered more additional improvements than any other tool in the supplementary evaluation (12), though these were outweighed by 13 unnecessary changes for a final score of -1. Antidote’s core evaluation in particular demonstrates its usefulness.

Final text summary

The text was significantly improved by Antidote’s corrections. Notably, it was the only tool to correctly solve the unpaired quotation mark error around the word “Nobody.” However, some errors were introduced, and incorrect solutions negatively affected the text.

Qualitative assessment

a) Were corrections made consistently? Are there instances of errors that were corrected in one sentence but not detected in another?

Corrections were generally consistent; all spacing errors were identified and corrected, and capitalization of proper nouns was applied consistently. Subject/verb agreement was also generally enforced, although Antidote struggled to correct agreement errors where the subject and verb were separated from each other (e.g., “The most important qualities of Odysseus that allows them to escape, however, is his intelligence and crafty resourcefulness”).

b) Are sentence-by-sentence corrections suitable within the wider context of the paragraph? Did any corrections potentially change the intended meaning as suggested by the context? Are there instances where the use of specialized terminology was made inconsistent/incorrect?

There was one instance of a revision changing the meaning: The poem reference “9.210” was treated as a standard number, and so the tool incorrectly changed the period to a comma. The tool also revised terms and phrases in quotations, which is not recommended, and changed an uncontracted form to a contracted form, which is not typically permitted in formal writing.

c) Are “neutral”/unnecessary changes applied consistently?

Neutral changes were applied consistently, which had both positive and negative effects. For instance, every single use of a “him” or “her” pronoun was flagged, and gender-neutral alternatives were suggested, even though all of these instances referred to specific people and were thus correct.

d) Do any changes negatively affect the readability or flow in the wider context?

The tool suggested that “in the first place” be revised to “first” in a context where the second option did not fit (i.e., “The quality of Odysseus and his men that primarily got them into this mess first is their reckless courage.”). Otherwise, the changes did not significantly impact readability.

Scribendi Accelerator 2.3.0.9

Test summary

The Accelerator performed very well in both the core evaluation and supplementary evaluation. In the core evaluation, it achieved a score of 25, a full 9 points higher than its closest peer (Grammarly). It also achieved the highest score of its peers in the supplementary evaluation (7). Finally, the Accelerator was the only GEC tool tested that did not introduce any errors or unnecessary changes into the sample text.

Final text summary

The text is substantially improved after implementing the suggested revisions. As with the other tools tested, many errors remain in the text; however, unlike the other tools, no additional errors or unnecessary changes were introduced.

Qualitative assessment

a) Were corrections made consistently? Are there instances of errors that were corrected in one sentence but not detected in another?

The Accelerator generally corrected subject/verb agreement errors, spacing errors, and article usage errors consistently. It also did fairly well with correcting plural forms. However, it was less consistent in correcting errors in comma usage (3 instances corrected, 2 missed), apostrophe usage (1 instance corrected, 1 missed), and capitalizing proper nouns (1 instance corrected, 2 instances missed).

b) Are sentence-by-sentence corrections suitable within the wider context of the paragraph? Did any corrections potentially change the intended meaning as suggested by the context? Are there instances where the use of specialized terminology was made inconsistent/incorrect?

No potential issues were noted; most changes were minor enough to not risk changing the meaning or negatively affecting the text.

c) Are “neutral”/unnecessary changes applied consistently?

No changes in this category were noted.

d) Do any changes negatively affect the readability or flow in the wider context?

The only change that risked this negative impact was the incorrect solution to inconsistent quotation marks by changing ‘Nobody” to ‘Nobody”’, exacerbating the problem. Otherwise, the changes were not considered to negatively affect the text.

Discussion

The results of these tests indicate that in comparison to its peers, the Accelerator offered significant benefits to users in both correcting clear errors and suggesting improvements to writing. The low number of incorrect solutions and errors introduced in the test suggests that using the Accelerator is a relatively efficient process that, relative to other tools, does not require much additional time to sift through suggested changes and determine which are valid.

Additional insight into the overall performance of the Accelerator can be garnered by comparing its specific methods of error correction to those of one of its peers. In this section, the Accelerator’s performance in correcting specific chunks of text will be compared to Grammarly’s performance in correcting the same chunks.

Comparing the Scribendi Accelerator and Grammarly (April 2020)

In comparing the respective error corrections of the Scribendi Accelerator and Grammarly in the test document, there are 18 points of overlap. Of these, there were 14 instances where the Accelerator and Grammarly corrected the same errors in an identical way. These 14 instances are not relevant to the purposes of this comparison and will therefore not be discussed. The other 4 points of overlap, however, provide some insight into the method and performance of each tool in correcting specific chunks of text.

First, there is the following passage in the test document: “Indeed, they threat lot of freshwater fish species.” Grammarly offered the following two corrections to this text:

Applying both corrections results in the following text: “Indeed, the threat a lot of freshwater fish species.” The tool made corrections that targeted single words and phrases individually, which is effective in text that is generally well written. In text that contains multiple errors, however, the method’s efficacy is somewhat lower.

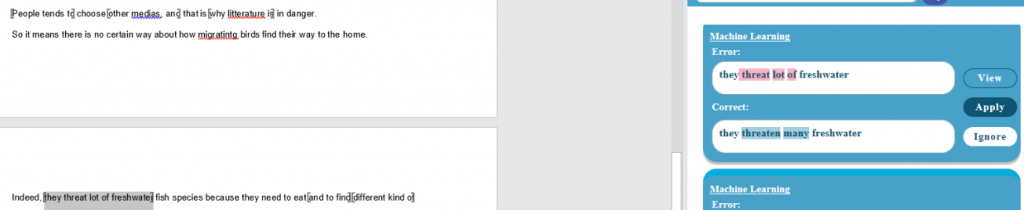

The Accelerator offered the following solution to the same chunk of text:

Applying the Accelerator’s solution results in the following text: “Indeed, they threaten many freshwater fish species.” Notably, the Accelerator combined multiple error corrections into a single suggestion that results in grammatically cogent text. Furthermore, instead of simply revising “lot of” to “a lot of,” the Accelerator improved the text further by revising it to the less informal “many.”

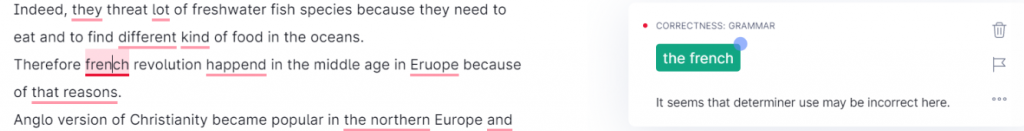

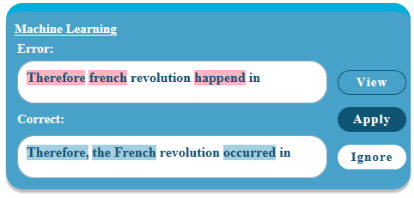

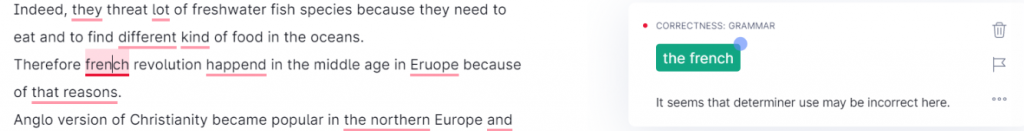

Second, there is the following text chunk from the test document: “Therefore french revolution happend.” Grammarly offered the following solution:

With the additional spelling correction, implementing these changes results in the following text: “Therefore the french revolution happened.”

The Scribendi Accelerator offered the following solution:

Again, we see that the Accelerator can implement multiple corrections and improvements to writing in a single suggestion. Like Grammarly, the Accelerator corrected the most noticeable error—the missing definite article. It furthermore corrected the capitalization of the proper noun French, though it did not recognize that “revolution” should have been capitalized as well in this context. In addition to these corrections, it also suggested two improvements: a comma following a short introductory phrase (as recommended by the Chicago Manual of Style) and rewording “happened” to the more formal “occurred” (note that, as explained previously, simple spelling error corrections are not considered grammar error corrections for the purpose of this evaluation).

There was also one instance where Grammarly offered an incorrect solution and the Accelerator offered a correct solution. Instead of recognizing a misplaced apostrophe, Grammarly suggested the following revision:

The Accelerator instead offered the following solution:

Through deep learning, the Accelerator is able to consider the error in the context of the sentence and suggest a revision based on what the most common solution would be.

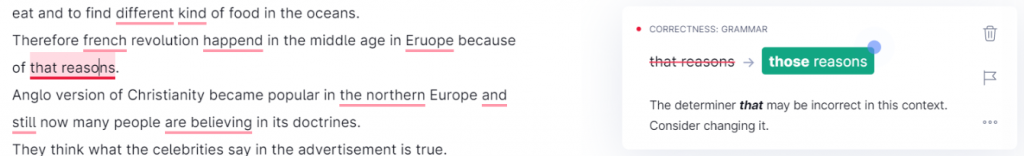

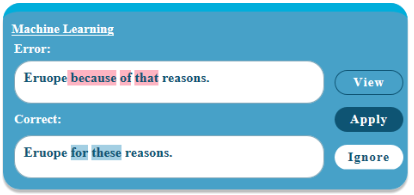

The final point of overlap reveals a more minor divergence. Grammarly corrected the incorrect text “because of that reasons” to “because of those reasons,” while the Accelerator corrected it to “for these reasons.” Though different in their extent, both corrections are valid.

Grammarly

The Scribendi Accelerator

From these comparisons, it is clear that the Accelerator is particularly effective and efficient in correcting chunks of text containing multiple errors compared to its leading peer. Additionally, by combining multiple corrections and improvements into single suggestions, the Accelerator reduces the amount of time the user needs to spend reviewing the suggested corrections to determine their suitability. This makes the tool especially suited to professional editors, who are often looking to maximize their efficiency and would likely prefer not to use a GEC tool that takes up too much of their time.

Conclusion

This evaluation compared the performance of Scribendi’s GEC tool, the Accelerator, with five similar tools on the market. The results demonstrate the value that the Accelerator offers to users and particularly to professional editors. In the tests, the Accelerator not only corrected a greater number of errors than its peers but also offered a substantial number of improvements to the writing, while introducing no errors and suggesting no unnecessary changes. The relatively short length of the sample document somewhat limits the generalizability of the quantitative evaluation, but the Accelerator’s performance metrics compared to those of its peers are notable nonetheless.

In comparing specific corrections with those of its peers, the Accelerator also exhibited relatively high proficiency in correcting chunks of text that contain multiple errors. The Accelerator’s method of combining multiple corrections and improvements into single suggestions significantly increases the efficiency of using the tool—a quality that many editors will no doubt appreciate when attempting to optimize their workflow.

Overall, the Accelerator’s use of deep learning and its basis in a dataset derived from Scribendi’s editors allow it to approach texts similarly to human editors. The Accelerator continues to undergo development and refinement, and further improvements to performance are anticipated.

Feel free to leave a comment below; we would love to hear your opinions.

Try the Accelerator for Free

The Scribendi AI has a 1-month free trial and is currently available to download through the website.

About the Author

Daniel Ruten is an in-house editor at Scribendi. He honed his academic writing and editing skills while attaining a BA and MA in History at the University of Saskatchewan, where he researched institutions and the experiences of mental patients in 18th-century England. In his spare time, he enjoys producing music, reading books, drinking too much coffee, and wandering around nature.